2. Infrastructure

The infrastructure documentation is hosted at the link below:

https://confluence.man.poznan.pl/community/display/WFMS

In a workflow, physics modules exchange physics data in the form of standardised blocks of information: the Consistent Physical Objects (CPOs). The list of CPOs as well as their inner structure defines the EU-IM Data Structure. All physics modules should use these standardised interfaces for I/O.

The most recent data structure for EU-IM workflows can be browsed at the link below:

2.1. Kepler

2.1.1. Introduction to Kepler - basics

Kepler is a workflow engine and design platform for analyzing and modeling scientific data. Kepler provides a graphical interface and a library of pre-defined components to enable users to construct scientific workflows which can undertake a wide range of functionality. It is primarily designed to access, analyse, and visualise scientific data but can be used to construct whole programs or run pre-existing simulation codes.

Kepler builds upon the mature Ptolemy II framework, developed at the University of California, Berkeley. Kepler itself is developed and maintained by the cross-project Kepler collaboration.

The main components in a Kepler workflow are actors. Kepler inherits from Ptolemy II a design that separates workflow components (“actors”) from workflow orchestration (“directors”), which makes components more easily reusable. Workflows can work at very different levels of granularity, from low-level workflows (that explicitly move data around or start and monitor remote jobs, for example) to high-level workflows that interlink complex steps/actors. Actors can be combined to construct more complex actors, enabling complex functionality to be encapsulated in easy-to-use packages. A wide range of actors is available for use and reuse.
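The actor/director split can be illustrated with a small C++ toy model. This is only a sketch of the design idea, not Kepler code (Kepler and Ptolemy II are Java applications): `Actor` and `SequentialDirector` are hypothetical names, and this director simply fires the actors in a fixed order.

```cpp
#include <functional>
#include <vector>

// Toy actor (hypothetical): it only knows how to transform a token,
// not when it runs.
struct Actor {
    std::function<double(double)> fire;
};

// Toy director (hypothetical): it owns the orchestration policy. This one
// fires the actors strictly in sequence, feeding each output to the next actor.
struct SequentialDirector {
    double run(const std::vector<Actor>& actors, double token) const {
        for (const Actor& actor : actors) {
            token = actor.fire(token);
        }
        return token;
    }
};
```

For example, with one actor that doubles its input and one that adds one, `SequentialDirector{}.run({doubler, addOne}, 2.0)` yields 5.0. Swapping in a different director would change how the same actors are scheduled without modifying them, which is the reuse benefit described above.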

2.1.1.1. Installing Kepler and tutorial workflows

You can download Kepler from the following page: https://kepler-project.org/users/downloads

In order to install Kepler and the tutorial-related workflows, you have to follow the instructions at

Now you can start the Kepler application and proceed to the tutorial examples.

2.1.2. Kepler IMAS actors

| IMAS actor | Description |
|---|---|
| UALSliceCollector | Stores one slice from the input IDS into a different run. This way, it is possible to collect intermediate results during workflow execution. |
| UALPython | Runs an external Python process and passes input/output data between the workflow and the process itself. This actor is most commonly used for data visualization. The user can pass a Python script directly to the actor. |
| UALMuxParam | Provides behavior similar to ualmux/UALMux; however, this actor has two additional ports: fieldDescription (contains the name of the field that will be modified) and fieldValue (contains the new value of the field). The main difference between the ualmux and ualmuxparam actors lies in the latter's ability to be used in a loop that modifies different fields inside an IDS: you can simply provide a different field name for each loop step. |
| UALMux | Provides a method for putting data inside an IDS. Inputs: inputIds/inputCpo (the IDS/CPO we are going to modify); inTime (time index at which data are supposed to be updated); the name of each field is specified as a port name, and the new value of the field is the value sent to that port. Outputs: outputIds/outputCpo (the modified IDS); outTime (the actual time index, which depends on the approximation mode). |
| UALInit | Initializes the input pulse file, creates the run work and provides the IDS description for other actors. Inputs: user (name of the user for the input data file, e.g. g2michal); machine (name of the machine for the input data file, e.g. test/jet); shot (shot number); run (run number); runwork (temporary run number, the place where output will be stored). Outputs: error (description of the error in case there are problems while accessing input data); all IDSes requested by the user (each IDS is specified as an output port name). |
| UALDemux | Reads data from a given IDS; the name of the field is specified as the output port name. |
| UALCollector | Stores input IDSes inside a new run. This way, it is possible to copy data into a different shot/run rather than into the run work. |
| UALClose | Closes the run work based on the description passed via the input IDS. |
| SetBreakpoint | Enforces “debug” mode for IMAS-based actors. It sets the global parameter “ITM_DEBUG” to either true or false. If “ITM_DEBUG” is true, all FC2K-generated actors will start in debug mode. |
| RecordSet | Sets values inside a record (take a look at the short tutorial: RecordGet/RecordSet). |
| RecordGet | Gets values from a record (take a look at the short tutorial: RecordGet/RecordSet). |
| IDSOccurence | Creates a duplicate of an IDS with a new occurrence number. This way, it is possible to store data before they get modified by user code. |
| IDSFlush | Flushes data from the workflow: data from the memory cache are stored inside the database. |
| IDSDiscard | Discards data inside the workflow. Data will be re-read into the memory cache. |
| IDSContentStd | Displays IDS data on the console (better for huge data sets). |
| IDSContent | Displays IDS data. |
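The port-name conventions of UALMux, UALDemux and UALMuxParam can be pictured with the toy model below. It is a sketch under loud assumptions: `ToyIds` is a hypothetical flat map from field name to value, whereas real IDSs are hierarchical structures accessed through the UAL, and time indices and approximation modes are ignored.

```cpp
#include <map>
#include <string>
#include <utility>
#include <vector>

// Hypothetical stand-in for an IDS: a flat map from field name to value.
using ToyIds = std::map<std::string, double>;

// UALMux-like: the port name selects the field, the token is the new value.
ToyIds mux(ToyIds ids, const std::string& portName, double value) {
    ids[portName] = value;
    return ids;  // plays the role of the outputIds port
}

// UALDemux-like: the output port name selects the field to read.
// std::map::at throws std::out_of_range for an unknown field.
double demux(const ToyIds& ids, const std::string& portName) {
    return ids.at(portName);
}

// UALMuxParam-like loop: each step supplies a (fieldDescription, fieldValue)
// pair, so one actor can modify a different field on every iteration.
ToyIds muxParamLoop(ToyIds ids,
                    const std::vector<std::pair<std::string, double>>& steps) {
    for (const auto& [fieldDescription, fieldValue] : steps) {
        ids = mux(ids, fieldDescription, fieldValue);
    }
    return ids;
}
```

The loop variant is what makes UALMuxParam useful: a plain mux fixes the field at design time through its port name, while the parametrized version receives the field name as data.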

2.1.3. IMAS Kepler based configuration

2.1.3.1. Running Kepler using IMAS environment

2.1.3.1.1. Setting up environment

Please do not forget to set the Java memory settings (20 MB thread stack, 8 GB initial and maximum heap):

export _JAVA_OPTIONS="-Xss20m -Xms8g -Xmx8g"

2.1.3.1.1.1. Backing up old files

Before configuring Kepler for the first time, make sure to back up your old data files:

cd ~

mv .kepler .kepler~

mv KeplerData KeplerData~

mv .ptolemyII .ptolemyII~

2.1.3.1.2. Creating place to store your personal installations of Kepler

IMAS-based installations are stored inside the $HOME/kepler directory.

Before proceeding further, make sure to create the kepler directory:

# create directory inside $HOME

cd ~

mkdir kepler

2.1.3.1.3. Running Kepler (default release)

- In order to start Kepler, you have to use helper scripts that will install and configure your personal copy of Kepler.

Load the IMAS and Kepler modules:

module load imas

module load kepler

# NOTE! It might be that you don't have a Kepler copy inside your $HOME

# in that case you need to install it

kepler_install_light

Start Kepler:

# run the alias that executes Kepler

kepler

2.1.4. FC2K - Embedding user codes into Kepler

This tutorial introduces the concept of using the FC2K tool to build Kepler-compatible actors.

This tutorial explains:

how to set up codes for FC2K

how to build an actor using FC2K

how to incorporate an actor within a Kepler workflow

2.1.4.1. FC2K basics

2.1.4.1.1. What does FC2K actually do?

It generates a Fortran/C++ wrapper, which mediates between the Kepler actor and the user code in terms of:

reading/writing of in/out physical data (IDS)

passing arguments of standard types to/from the actor

It creates a Kepler actor that:

calls the user code

provides error handling

calls debugger (if run in “debug” mode)

calls batch submission commands for MPI based actors

2.1.4.1.2. FC2K main window

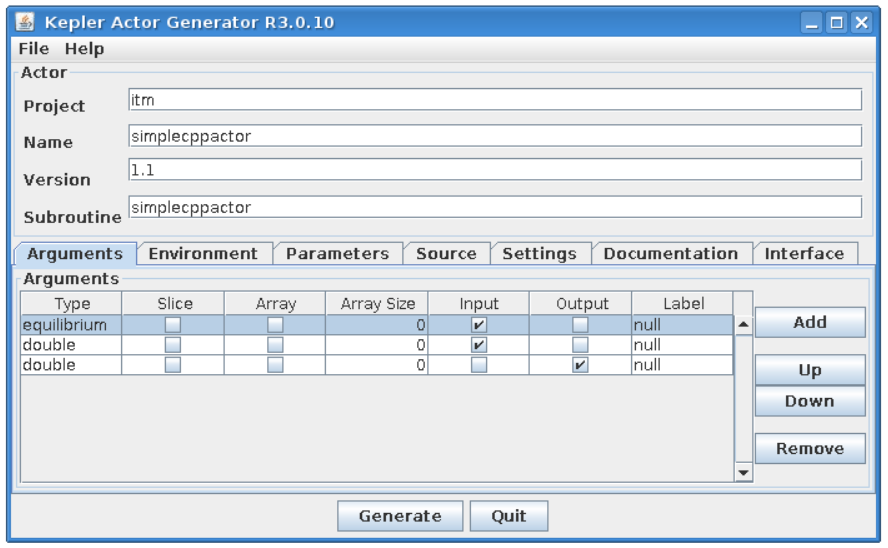

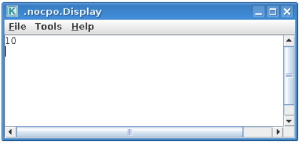

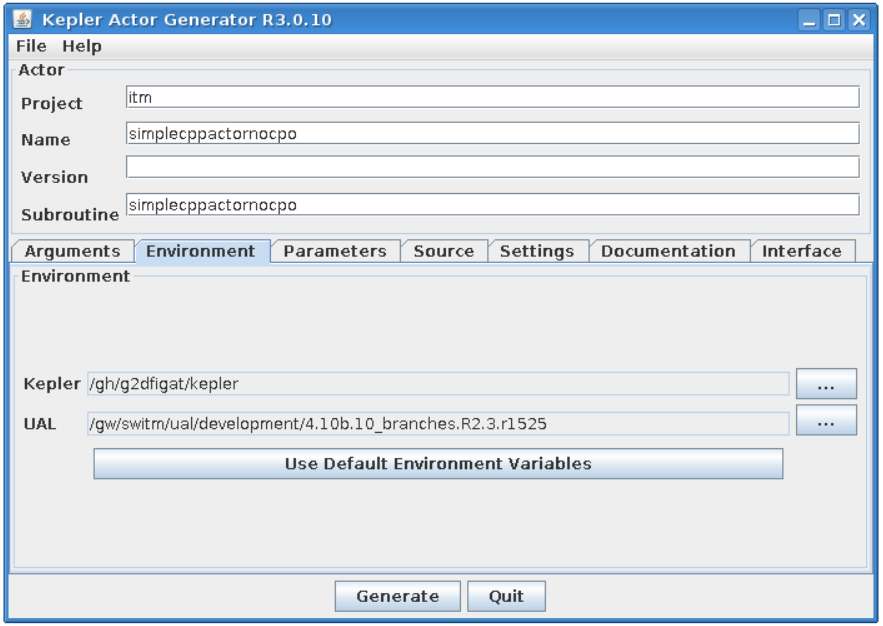

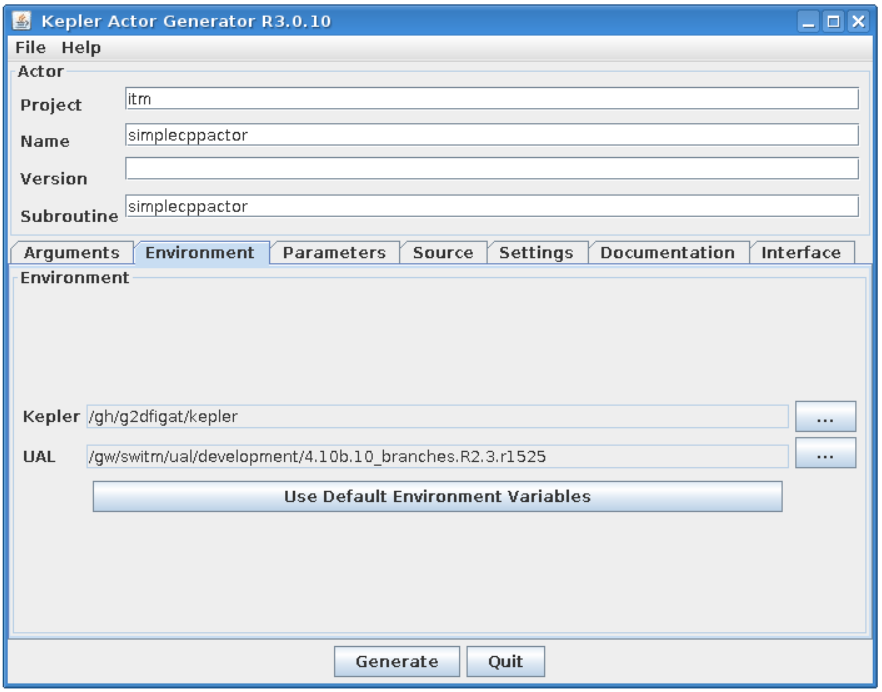

2.1.4.1.3. Actor description

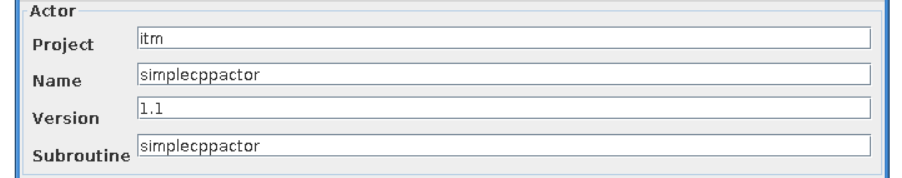

This group of graphical controls sets the description of the actor and its “place” in the hierarchy of elements in the Kepler “Component” browser:

Project - defines a branch in the Kepler “Component” browser where the actor will be placed

Name - a user-defined name of the actor

Version - a user-defined version of the user code

Subroutine - the name of the user subroutine (Fortran) or function (C++)

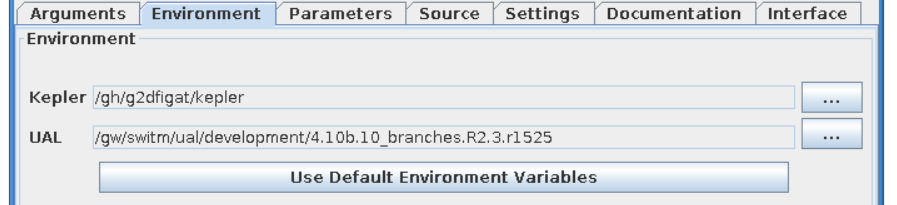

2.1.4.1.4. Environment

The Environment text fields show the UAL and Kepler locations.

Kepler - Kepler location (usually the same as $KEPLER)

UAL - IMAS UAL location (usually the same as $IMAS_PREFIX)

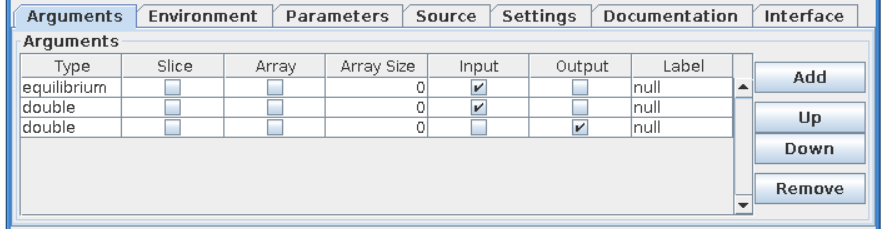

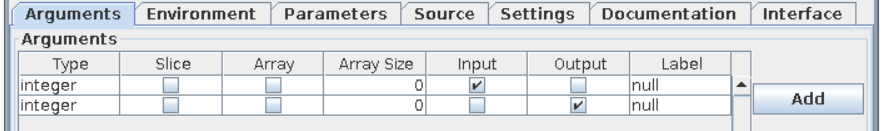

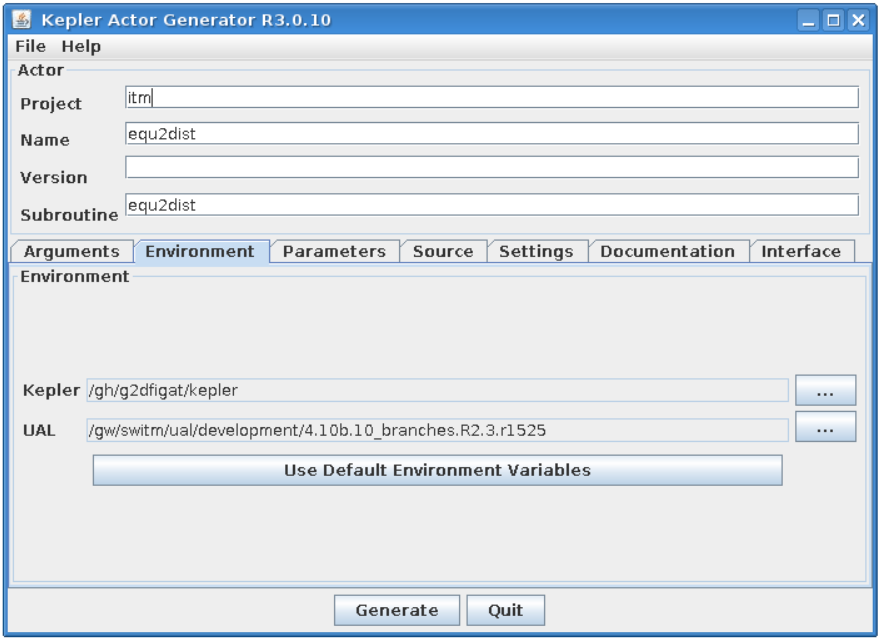

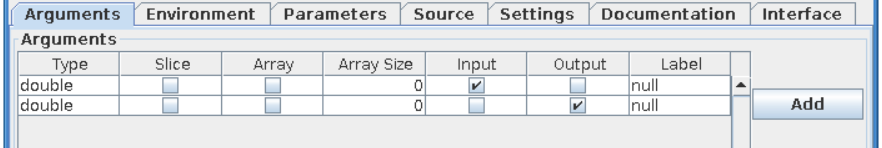

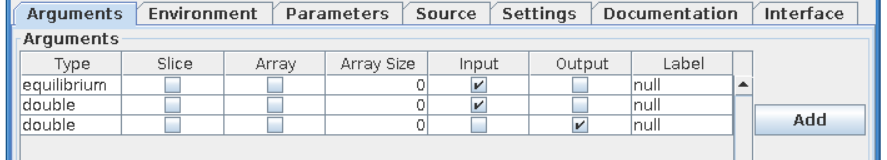

2.1.4.1.5. “Arguments” tab explained

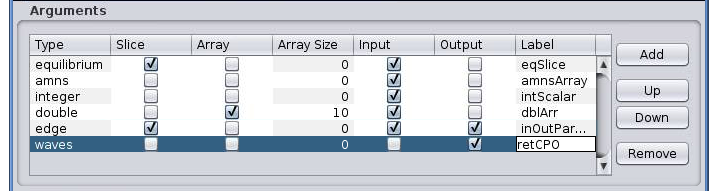

Below you can find an explanation of the FC2K “Arguments” tab.

Type - Defines the type of an argument. It is possible to choose either an IDS-based type (e.g. equilibrium, topinfo, etc.) or a primitive type (e.g. int, long, double, char)

Single slice - Determines if an IDS is passed as a single slice or as an array (this setting is valid for IDS types only)

if turned ON - Only one slice is passed. The actor will get an additional port to define a time.

if turned OFF - All slices of the IDS stored in the given shot/run are passed.

Is array - Determines if a primitive type is passed as a scalar or an array

if turned ON - An argument is passed as an array. It requires a definition of the array size (dynamic arrays are not supported)

if turned OFF - An argument is passed as a scalar.

Array size - Defines the size of an array of primitive types

Input - Defines argument as an input

Output - Defines argument as an output

Label - User defined name of an argument (and actor port)

Please take a look at the screenshot above:

equilibrium - an input parameter - one IDS (slice)

amns - an input parameter - all amns IDS slices stored in given shot/run

integer - an input parameter - a scalar

double - an input parameter - an array of size 10

edge - an in/out parameter - single slice of “edge” IDS

waves - an output parameter - all slices of “waves” IDS
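With “Single slice” turned ON, the actor receives a time through its extra port and one slice has to be picked. How a time is mapped to a stored slice depends on the UAL approximation mode; the sketch below assumes a “closest sample” rule purely for illustration, and `closestSliceIndex` is a hypothetical helper rather than a UAL function.

```cpp
#include <cmath>
#include <cstddef>
#include <stdexcept>
#include <vector>

// Hypothetical helper (not part of the UAL): returns the index of the stored
// slice whose time is closest to `time`. Ties go to the earlier slice.
std::size_t closestSliceIndex(const std::vector<double>& sliceTimes, double time) {
    if (sliceTimes.empty()) {
        throw std::invalid_argument("no slices stored");
    }
    std::size_t best = 0;
    for (std::size_t i = 1; i < sliceTimes.size(); ++i) {
        if (std::fabs(sliceTimes[i] - time) < std::fabs(sliceTimes[best] - time)) {
            best = i;
        }
    }
    return best;
}
```

For slices stored at times 0.0, 0.5 and 1.0, a requested time of 0.7 selects index 1 under this rule; an interpolating mode would instead combine neighbouring slices.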

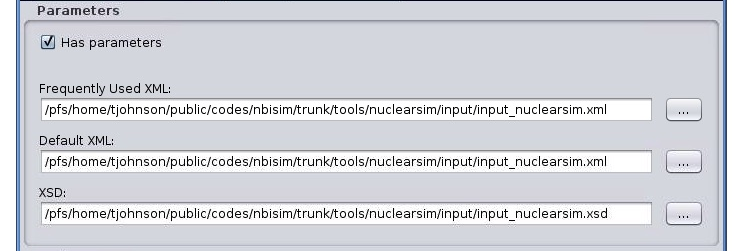

2.1.4.1.6. “Parameters” tab explained

Code specific parameters are all parameters which are specific to the code (like switches, scaling parameters, and parameters for built-in analytical models) as well as parameters to explicitly overrule fields in the ITM data structures.

Frequently Used XML - Actual value of the code parameters

Default XML - Default values of the code parameters

Schema - A (XSD) XML schema

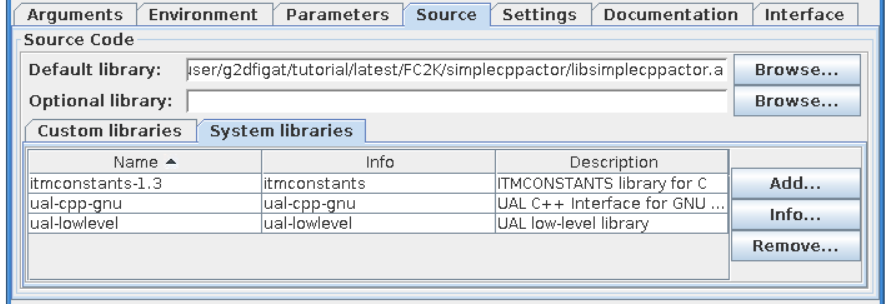

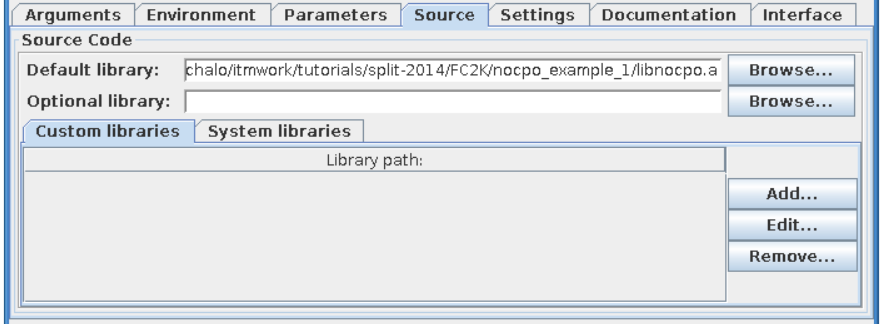

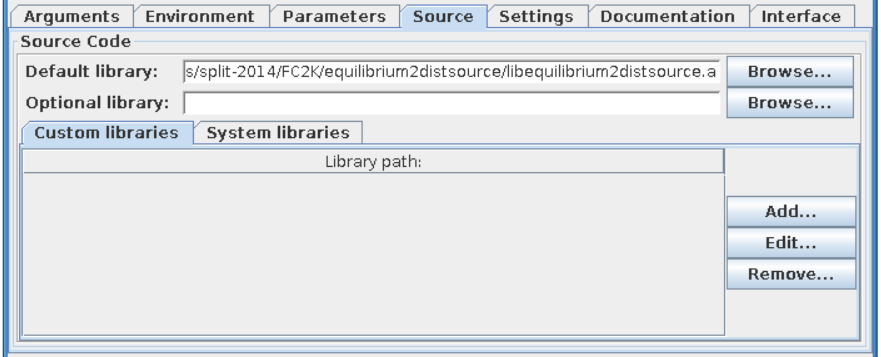

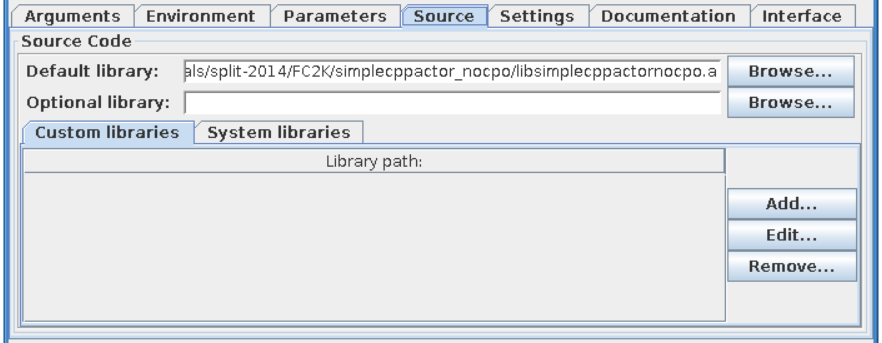

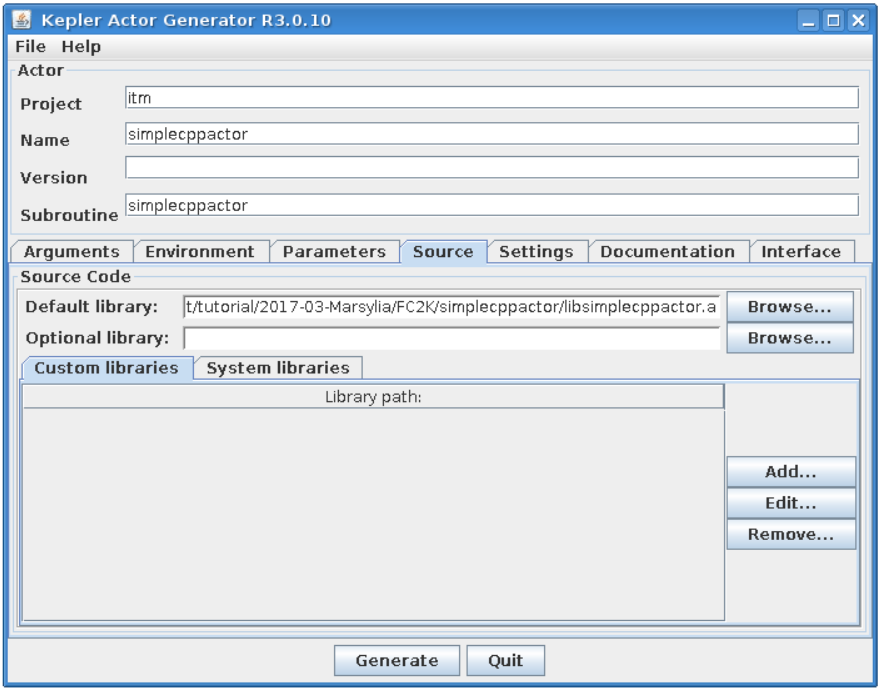

2.1.4.1.7. “Source” tab explained

The purpose of this tab is to define all code-related settings:

the programming language

the compiler used

the type of code execution (sequential or parallel)

the libraries being used

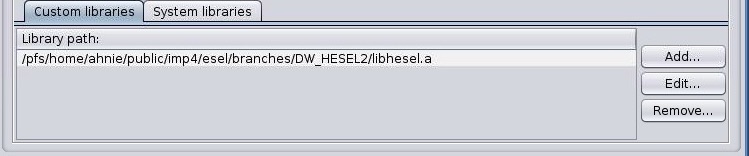

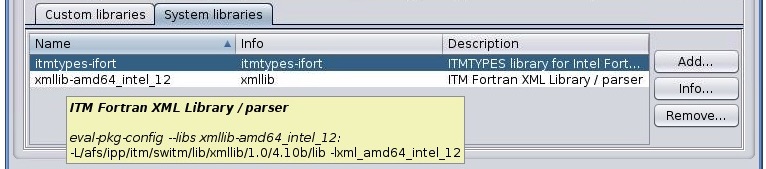

2.1.4.1.7.1. Libraries

“Main library”

The “Main library” field defines the path to the library containing the user subroutine/function.

“Optional library”

The “Optional library” field defines the path to an optional library containing the user subroutine/function.

“Custom libraries”

“Custom libraries” are non-standard static libraries required for building the user code.

Available operations on libraries list:

“Add…” - Adds a new library to the list

“Edit…” - Edits library path

“Remove” - Removes a library from the list

“System libraries”

“System libraries” are system libraries handled by pkg-config mechanism and required for building the user code.

A user can:

add a library from the list,

remove a library,

display detailed info (library definition returned by pkg-config mechanism)

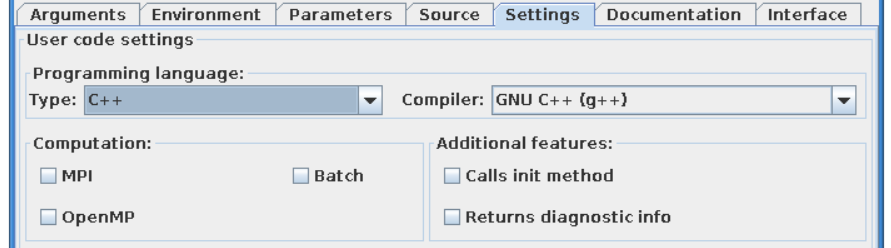

2.1.4.1.8. “Settings” tab explained

Using this tab, the user selects the programming language of the provided code, the compiler used to build the library, and the type of code execution (sequential or parallel).

Programming language:

Type - Defines the programming language of the user code. It can be set to:

Fortran

C/C++

Compiler - Defines compiler being used. Possible values:

ifort, gfortran

gcc, g++

Computation:

Parallel MPI - If turned ON, uses MPI compilers (mpiifort for ifort, mpif90 for gfortran, mpigxx for C)

OPENMP - Defines whether usage of OpenMP directives is turned ON or OFF

Batch - If turned ON, submits the user code to the job queue (combined with the Parallel MPI or OPENMP switch, it runs the user code as a parallel job)

Additional features:

Calls init method - If the user function needs any pre-initialization, an additional init function will be called.

Returns diagnostic info - adds output diagnostic information

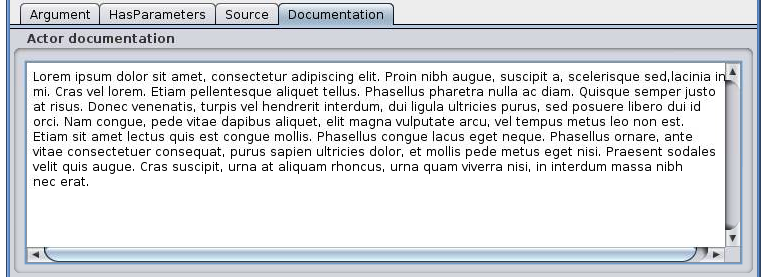

2.1.4.1.9. “Documentation” tab explained

The “Documentation” tab specifies a user-defined Kepler actor description. It can be displayed from the actor pop-up menu.

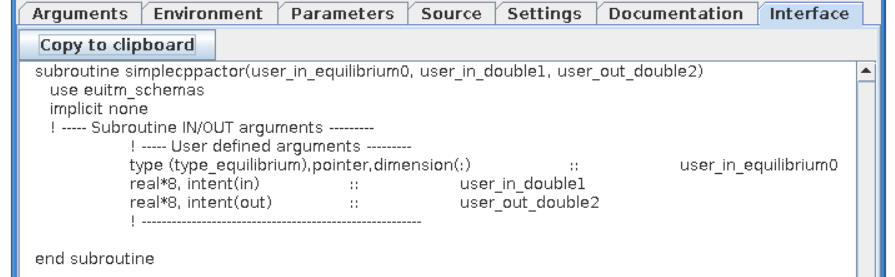

2.1.4.1.10. “Interface” tab explained

The “Interface” tab specifies the interface of the Kepler actor.

2.1.4.2. Incorporating user codes into Kepler using FC2K - exercises

In this part of the tutorial you will learn how to incorporate Fortran and C++ codes into Kepler.

Hands-on exercises show:

how to prepare Fortran and C++ codes for FC2K

how to prepare Fortran and C++ libraries

how to set up a Makefile

how to start and configure the FC2K tool

2.1.4.2.1. Embedding Fortran codes into Kepler

Simple Fortran code

In this exercise you will execute a simple Fortran code (multiplying the input value by two) within Kepler.

Fortran UAL example (CPO handling)

In this exercise you will create a Kepler actor that uses UAL.

2.1.4.2.2. Embedding C++ codes

Simple C++ code

A simple C++ code, incorporated into Kepler via the FC2K tool, that adds one to the value passed to the input port of the actor.

C++ code within Kepler (CPO)

In this exercise you will create a Kepler actor that uses UAL.

2.1.5. FC2K - developer guidelines

2.1.5.1. What does the code wrapper actually do?

The code wrapper mediates between the Kepler actor and the user code:

Passes variables of language built-in types (int, char, etc.) from the actor to the code

Reads CPO(s) from UAL and passes data to user code

Passes input code parameters (XML/XSD files) to user code

Calls user subroutine/function

Saves output CPO(s)
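The sequence of wrapper steps listed above can be sketched in C++ as follows. Everything here is hypothetical: `ToyCpo` and `ToyCodeParam` stand in for real CPOs and code parameters, no UAL calls are made, and the diagnostic info is passed as `std::string` instead of the `char**` convention of real FC2K C++ codes. The error handling follows the output flag convention (0 success, > 0 warning, < 0 error).

```cpp
#include <iostream>
#include <stdexcept>
#include <string>

// Hypothetical stand-ins; the real wrapper reads/writes CPOs through the UAL.
struct ToyCpo {
    double value = 0.0;
};

struct ToyCodeParam {
    std::string parametersXml;
};

// Hypothetical user code in the FC2K argument order: built-in type,
// input CPO, output CPO, code parameters, diagnostic info.
void userCode(const int& scale, const ToyCpo& in, ToyCpo& out,
              const ToyCodeParam& /*codeparam*/, int& outputFlag,
              std::string& diagnosticInfo) {
    if (in.value < 0.0) {
        outputFlag = -1;
        diagnosticInfo = "negative input value";
        return;
    }
    out.value = scale * in.value;
    outputFlag = 0;
    diagnosticInfo.clear();
}

// Sketch of the wrapper: the steps follow the list above.
ToyCpo runWrapped(int scale, const ToyCpo& storedInput, const std::string& xml) {
    const ToyCpo input = storedInput;   // read the input CPO (a UAL read in reality)
    const ToyCodeParam codeparam{xml};  // pass the XML code parameters
    ToyCpo output;
    int flag = 0;
    std::string info;
    userCode(scale, input, output, codeparam, flag, info);  // call the user code
    if (flag < 0) {
        throw std::runtime_error("user code error: " + info);  // workflow stops
    }
    if (flag > 0) {
        std::cerr << "WARNING: " << info << '\n';  // workflow continues
    }
    return output;  // save the output CPO (a UAL write in reality)
}
```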

2.1.5.2. Development of Fortran codes

2.1.5.2.1. Subroutine syntax

subroutine name (<in/out arguments list> [,code_parameters] [,diagnostic_info])

name - subroutine name

in/out arguments list - a list of input and output subroutine arguments

code_parameters - optional - user defined input parameters

diagnostic_info - optional - arbitrary output diagnostic information

2.1.5.2.2. Arguments list

Mandatory

A list of input and output subroutine arguments including:

Fortran intrinsic data types, e.g.:

integer :: input

character(50) :: charstring

integer,dimension(4) :: tabint

CPOs, e.g.:

type (type_equilibrium),pointer :: equilibriumin(:)

type (type_distsource),pointer :: distsourceout(:)

2.1.5.2.3. Code parameters

user defined input parameters

input / optional

Argument of type: type_param

type type_param

character(len=132), dimension(:), pointer ::parameters

character(len=132), dimension(:), pointer ::default_param

character(len=132), dimension(:), pointer ::schema

endtype

Derived type type_param describes:

parameters - Actual value of the code parameters (instance of coparam/parameters in XML format).

default_param - Default value of the code parameters (instance of coparam/parameters in XML format).

schema - Code parameters schema.

An example:

type(type_param), intent(in) :: codeparam

2.1.5.2.4. Diagnostic info

arbitrary output diagnostic information

output / optional

!---- Diagnostic info ----

integer, intent(out) :: user_out_outputFlag

character(len=:), pointer, intent(out) :: user_out_diagnosticInfo

outputFlag - indicates if the user subroutine was successfully executed

outputFlag = 0 - SUCCESS, no action is taken

outputFlag > 0 - WARNING, a warning message is displayed, the workflow continues execution

outputFlag < 0 - ERROR, the actor throws an exception, the workflow stops

diagnosticInfo - an arbitrary string

2.1.5.2.5. Examples

**Example 1 Simple in/out argument types**

subroutine nocpo(input, output)

integer, intent(in):: input

integer, intent(out):: output

**Example 2 A CPO array as a subroutine argument**

subroutine equil2dist(equilibriumin, distsourceout)

use euITM_schemas

implicit none

!input

type (type_equilibrium), pointer :: equilibriumin(:)

!output

type (type_distsource), pointer :: distsourceout(:)

**Example 3 Usage of code input parameters**

subroutine teststring(coreprof,equi,tabint,tabchar,codeparam)

use euITM_schemas

implicit none

!input

type(type_coreprof),pointer,dimension(:) :: coreprof

integer, dimension(4), intent(in) :: tabint

!output

type(type_equilibrium),pointer,dimension(:) :: equi

character(50), intent(out) :: tabchar

!code parameters

type(type_param), intent(in) :: codeparam

2.1.5.3. Development of C++ codes

2.1.5.3.1. Function syntax

void name ( <in/out arguments list> [,code_parameters] [,diagnostic_info] )

name - function name

code_parameters - optional - user defined input parameters

diagnostic_info - arbitrary output diagnostic information

2.1.5.3.2. Arguments list

in/out arguments list

mandatory

a list of input and output function arguments including:

C++ intrinsic data types, e.g.:

int &x

double &y

CPOs, e.g.:

ItmNs::Itm::antennas & ant

ItmNs::Itm::equilibriumArray & eq

2.1.5.3.3. Code parameters

Optional

User defined input parameters

Argument of type: ItmNs::codeparam_t &

typedef struct {

char **parameters;

char **default_param;

char **schema;

} codeparam_t;

The structure codeparam_t describes:

parameters - Actual value of the code parameters (instance of coparam/parameters in XML format).

default_param - Default value of the code parameters (instance of coparam/parameters in XML format).

schema - Code parameters schema.

An example: ItmNs::codeparam_t & codeparam
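A user function might read a single value from the XML code parameters as sketched below. The sketch declares a local stand-in with the same layout as `codeparam_t` (in a namespace `toy`, to avoid clashing with the real `ItmNs` header), and it assumes that `*parameters` and `*default_param` each point to one NUL-terminated XML string; check this against the actual wrapper. The `findTagText` helper is hypothetical and naive (it only handles `<tag>text</tag>`); a real code would use an XML parser and validate against the schema. Falling back to the default parameters when a tag is missing is one possible policy, not a documented FC2K behavior.

```cpp
#include <initializer_list>
#include <optional>
#include <string>

namespace toy {
// Local stand-in with the same layout as the codeparam_t shown above.
typedef struct {
    char **parameters;
    char **default_param;
    char **schema;
} codeparam_t;
}  // namespace toy

// Hypothetical, naive lookup of the text in <tag>text</tag>:
// no attributes, comments or repeated tags are handled.
std::optional<std::string> findTagText(const std::string& xml,
                                       const std::string& tag) {
    const std::string open = "<" + tag + ">";
    const std::string close = "</" + tag + ">";
    const std::size_t start = xml.find(open);
    if (start == std::string::npos) return std::nullopt;
    const std::size_t begin = start + open.size();
    const std::size_t end = xml.find(close, begin);
    if (end == std::string::npos) return std::nullopt;
    return xml.substr(begin, end - begin);
}

// Looks a tag up in the actual parameters first, then in the defaults.
// Assumes each char** points to a single XML string.
std::optional<std::string> codeParameter(const toy::codeparam_t& codeparam,
                                         const std::string& tag) {
    for (char** source : {codeparam.parameters, codeparam.default_param}) {
        if (source != nullptr && *source != nullptr) {
            if (auto value = findTagText(*source, tag)) return value;
        }
    }
    return std::nullopt;
}
```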

2.1.5.3.4. Diagnostic info

arbitrary output diagnostic information

output / optional

void name(...., int* output_flag, char** diagnostic_info)

output_flag - indicates if the user function was successfully executed

output_flag = 0 - SUCCESS, no action is taken

output_flag > 0 - WARNING, a warning message is displayed, the workflow continues execution

output_flag < 0 - ERROR, the actor throws an exception, the workflow stops

diagnostic_info - an arbitrary string
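The following hypothetical user function shows how the three output flag ranges might be used. `reciprocal` and `copyMessage` are illustrative names, and the memory ownership of `*diagnostic_info` is not specified in this section: the sketch allocates with `std::malloc` and assumes the caller releases the string with `std::free`.

```cpp
#include <cmath>
#include <cstdlib>
#include <cstring>

// Hypothetical helper: copies a message into malloc'ed storage.
// Assumption: the caller releases *diagnostic_info with std::free.
static char* copyMessage(const char* message) {
    const std::size_t size = std::strlen(message) + 1;
    char* buffer = static_cast<char*>(std::malloc(size));
    if (buffer != nullptr) {
        std::memcpy(buffer, message, size);
    }
    return buffer;
}

// Hypothetical user function: y = 1/x, reporting through the output flag.
void reciprocal(double& x, double& y, int* output_flag, char** diagnostic_info) {
    if (x == 0.0) {
        y = 0.0;
        *output_flag = -1;  // ERROR: the actor throws, the workflow stops
        *diagnostic_info = copyMessage("input is zero, no reciprocal");
        return;
    }
    y = 1.0 / x;
    if (std::fabs(x) < 1e-6) {
        *output_flag = 1;   // WARNING: message displayed, workflow continues
        *diagnostic_info = copyMessage("input is tiny, result is very large");
    } else {
        *output_flag = 0;   // SUCCESS: no action is taken
        *diagnostic_info = copyMessage("ok");
    }
}
```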

2.1.5.3.5. Examples

**Example 4. Simple in/out argument types**

void simplecppactornocpo(double &x, double &y)
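A possible body for Example 4, assuming `x` is the input port and `y` the output port, and using the operation described for the simple C++ exercise (adding one to the input value):

```cpp
// Sketch of Example 4 (assumption: x = input port, y = output port).
void simplecppactornocpo(double &x, double &y)
{
    y = x + 1.0;  // the input is only read; the result goes to the output
}
```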

**Example 5. A CPO array as a function argument**

void simplecppactor(ItmNs::Itm::equilibriumArray &eq, double &x, double &y)

**Example 6. Usage of init function and code input parameters**

void mycppfunctionbis_init();

void mycppfunction(ItmNs::Itm::summary& sum, ItmNs::Itm::equilibriumArray& eq, int& x, ItmNs::Itm::coreimpur& cor, double& y, ItmNs::codeparam_t& codeparam)
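When “Calls init method” is enabled, an additional function is called for pre-initialization. The pattern can be sketched as one-time setup of state that the main function relies on. `myfunction_init`, `myfunction` and the coefficient table are hypothetical, and the sketch assumes the init function is called before the main function.

```cpp
#include <vector>

namespace {
// State prepared once by the init function and reused by every later call.
std::vector<double> g_coefficients;
}  // namespace

// Hypothetical init function: prepares the coefficients of 1 + 0.5x + 0.25x^2.
void myfunction_init()
{
    g_coefficients = {1.0, 0.5, 0.25};
}

// Hypothetical main function: evaluates the polynomial at x with Horner's rule.
// It relies on myfunction_init() having been called first; otherwise the
// coefficient table is empty and y is 0.
void myfunction(double& x, double& y)
{
    y = 0.0;
    for (auto it = g_coefficients.rbegin(); it != g_coefficients.rend(); ++it) {
        y = y * x + *it;
    }
}
```

After `myfunction_init()`, calling `myfunction` with x = 2 gives y = 3; without the init call, the same call gives y = 0.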

2.1.5.4. Delivery of the user code

The user code should be delivered as a static library. Please find examples of the simple “makefiles” below:

**Example 7. Building of Fortran code**

F90 = $(ITM_INTEL_FC)

COPTS = -g -O0 -assume no2underscore -fPIC -shared-intel

INCLUDES = $(shell eval-pkg-config --cflags ual-$(ITM_INTEL_OBJECTCODE))

all: libequilibrium2distsource.a

libequilibrium2distsource.a: equilibrium2distsource.o

ar -rvs $@ $^

equilibrium2distsource.o: equilibrium2distsource.f90

$(F90) $(COPTS) -c -o $@ $^ ${INCLUDES}

clean:

rm -f *.o *.a

**Example 8. Building of C++ code**

CXX=g++

CXXFLAGS= -g -fPIC

CXXINCLUDES= ${shell eval-pkg-config --cflags ual-cpp-gnu}

all: libsimplecppactor.a

libsimplecppactor.a: simplecppactor.o

ar -rvs $@ $^

simplecppactor.o: simplecppactor.cpp

$(CXX) $(CXXFLAGS) $(CXXINCLUDES) -c -o $@ $^

clean:

rm -f *.a *.o

2.1.6. FC2K - Example 1 - Embedding Fortran codes into Kepler (no CPOs)

The knowledge gained: after this exercise you will:

know how to prepare Fortran codes for FC2K

know how to build a Fortran library

know how to set up a Makefile

know how to start and configure the FC2K tool

In this exercise you will execute a simple Fortran code within Kepler. To do this, follow the instructions below:

2.1.6.1. Get familiar with codes that will be incorporated into Kepler

Go to the Code Camp-related materials within your home directory:

shell> cd $TUTORIAL_DIR/FC2K/nocpo_example_1

You can find various files there. Pay particular attention to the following ones:

nocpo.f90 - Fortran source code that will be executed from Kepler

Makefile - makefile that allows you to build the library file

nocpo_fc2k.xml - parameters for the FC2K application (NOTE! This file contains my own settings; we will modify them during the tutorial)

nocpo.xml - example workflow

2.1.6.2. Build the code by issuing

shell> make clean

shell> make

The code is now ready to be used within FC2K.

2.1.6.3. Prepare environment for FC2K

Make sure that all required system settings are correctly set

shell> source $ITMSCRIPTDIR/ITMv1 kepler test 4.10b > /dev/null

2.1.6.4. Start FC2K application

This is as simple as typing fc2k in a terminal:

shell> fc2k

After a while, you should see FC2K’s main window.

2.1.6.5. Open a nocpo_example_1 project

Choose File -> Open and navigate to $TUTORIAL_DIR/FC2K/nocpo_example_1.

Open file nocpo_fc2k.xml.

You should see the new parameter settings loaded into FC2K.

After loading the parameters, you will notice that they point to locations within your home directory.

2.1.6.6. Project settings

Please take a look at the project settings.

Subroutine arguments:

one input argument of type integer

one output argument of type integer

2.1.6.7. After all the settings are correct, you can generate the actor

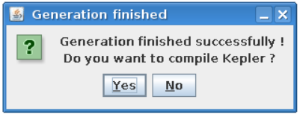

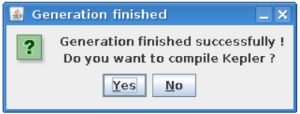

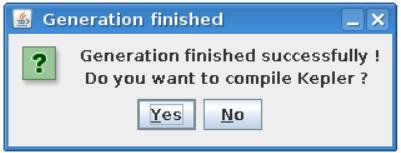

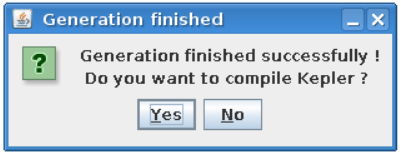

Simply press the “Generate” button and wait until FC2K finishes the generation.

2.1.6.8. Confirm Kepler compilation

After the actor is generated, FC2K offers to compile the Kepler application. Make sure to compile it by pressing “Yes”.

2.1.6.9. You can now start Kepler and use the generated actor

Open a new terminal window, make sure that all environment settings are correctly set, and execute Kepler:

shell> source $ITMSCRIPTDIR/ITMv1 kepler test 4.10b > /dev/null

shell> kepler.sh

After Kepler is started, open the example workflow from the following location:

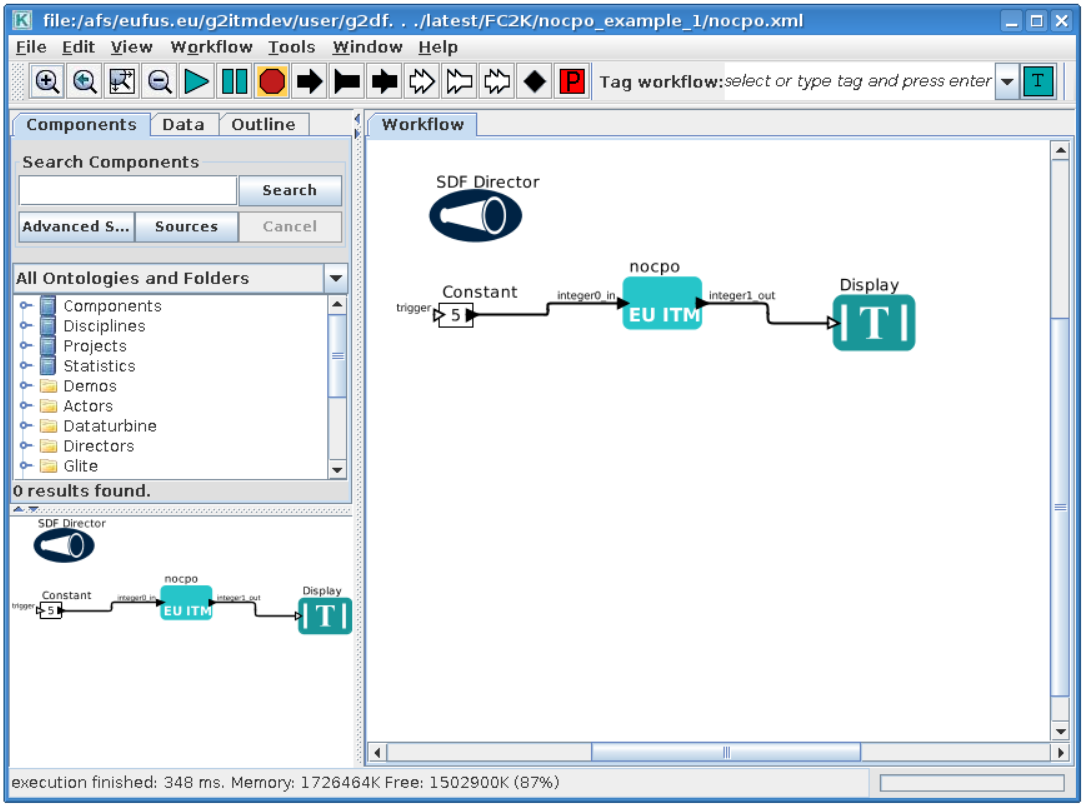

$TUTORIAL_DIR/FC2K/nocpo_example_1/nocpo.xml

You should see a similar workflow on screen.

2.1.6.10. Launch the workflow

You can start the workflow execution by pressing the “Play” button.

After the workflow finishes its execution, you should see a result similar to the one below:

Exercise no. 1 finishes here.

2.1.7. FC2K - Example 2 - Embedding Fortran code into Kepler (CPOs)

Exercise no. 2.

Fortran example (CPO handling)

(approx. 30 min)

The knowledge gained

After this exercise you will:

know how to prepare Fortran codes that use UAL

know how to prepare a Fortran-based library that uses UAL

know how to set up a Makefile

know how to start and configure the FC2K tool

In this exercise you will execute a simple Fortran code that uses UAL. The code will be incorporated into Kepler. To do this, follow the instructions below:

2.1.7.1. Get familiar with codes that will be incorporated into Kepler

Go to the Code Camp-related materials within your home directory:

shell> cd $TUTORIAL_DIR/FC2K/equilibrium2distsource/

You can find various files there. Pay particular attention to the following ones:

equilibrium2distsource.f90 - Fortran source code that will be executed from Kepler; this code uses UAL

Makefile - makefile that allows you to build the library file

cposlice2cposlicef_fc2k.xml - parameters for the FC2K application (NOTE! This file contains my own settings; we will modify them during the tutorial)

cposlice2cposlicef_kepler.xml - example workflow

2.1.7.2. Build the code

The Fortran example can be built by issuing:

shell> make clean -f make_ifort

shell> make -f make_ifort

The code is now ready to be used within FC2K.

2.1.7.3. Prepare environment for FC2K

Make sure that all required system settings are correctly set

shell> source $ITMSCRIPTDIR/ITMv1 kepler test 4.10b > /dev/null

2.1.7.4. Start FC2K application

This is as simple as typing fc2k in a terminal:

shell> fc2k

After a while, you should see FC2K’s main window.

2.1.7.5. Open project cposlice2cposlicef_fc2k

Choose File -> Open

Navigate to $TUTORIAL_DIR/FC2K/equilibrium2distsource/.

Open file cposlice2cposlicef_fc2k.xml.

You should see the new project loaded into FC2K.

2.1.7.6. Project settings

Please take a look at the project settings.

Subroutine arguments:

one input argument - CPO array

one output argument - CPO array

After loading the parameters, you will notice that the library location points to a location within your itmwork directory ($ITMWORK).

2.1.7.7. After all the settings are correct, you can generate the actor

Simply press the “Generate” button and wait until FC2K finishes the generation.

2.1.7.8. Confirm Kepler compilation

After the actor is generated, FC2K offers to compile the Kepler application. Make sure to compile it by pressing “Yes”.

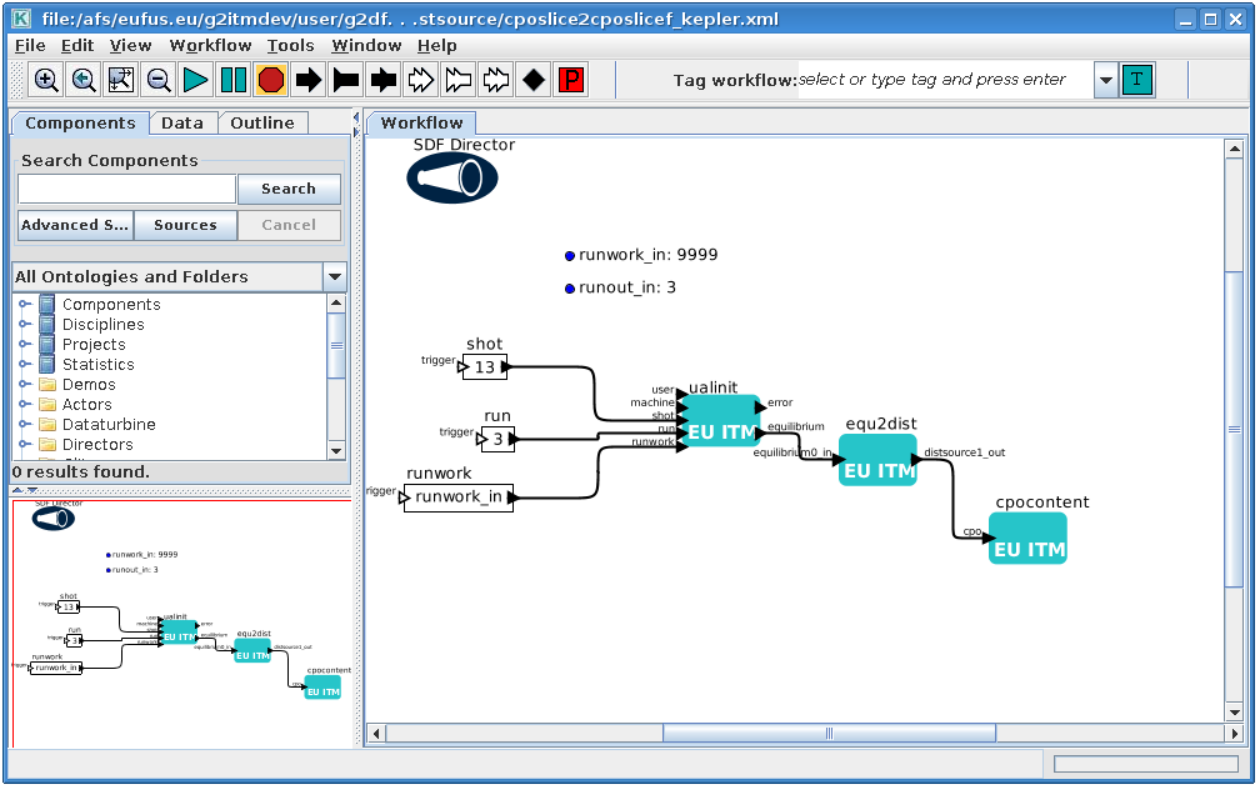

2.1.7.9. You can now start Kepler and use the generated actor

Open a new terminal window, make sure that all environment settings are correctly set, and execute Kepler:

shell> source $ITMSCRIPTDIR/ITMv1 kepler test 4.10b > /dev/null

shell> kepler.sh

After Kepler is started, open the example workflow from the following location:

$TUTORIAL_DIR/FC2K/equilibrium2distsource/cposlice2cposlicef_kepler.xml

You should see a similar workflow on screen.

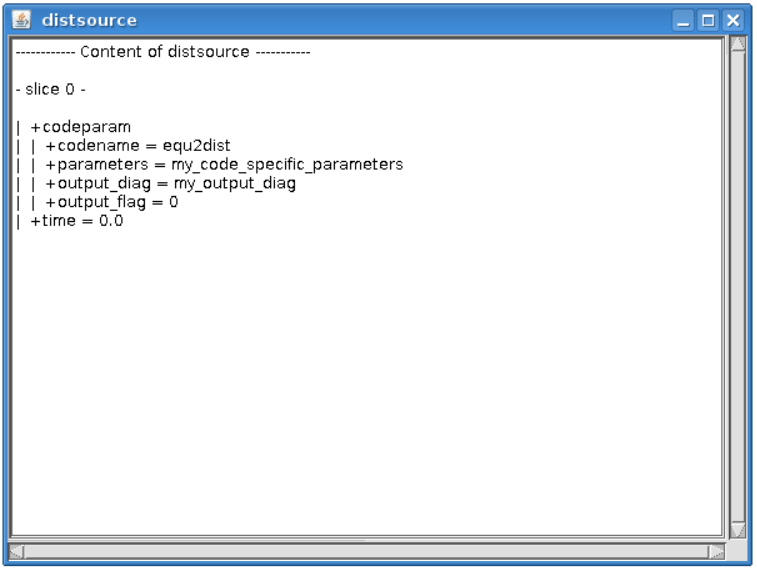

2.1.7.10. Launch the workflow

You can start the workflow execution by pressing the “Play” button.

After the workflow finishes its execution, you should see a result similar to the one below:

Exercise no. 2 finishes here.

2.1.8. FC2K - Example 3 - Embedding C++ code within Kepler (no CPOs)

Exercise no. 3

Embedding simple C++ code within Kepler (no CPOs)

(approx. 30 min)

The knowledge gained: after this exercise you will:

know how to prepare C++ codes for FC2K

know how to prepare a C++ library

know how to set up a Makefile

know how to start and configure the FC2K tool

In this exercise you will execute a simple C++ code within Kepler. To do this, follow the instructions below:

2.1.8.1. Get familiar with codes that will be incorporated into Kepler

Go to the Code Camp-related materials within your home directory:

shell> cd $TUTORIAL_DIR/FC2K/simplecppactor_nocpo

You can find various files there. Pay particular attention to the following ones:

simplecppactornocpo.cpp - C++ source code that will be executed from Kepler

Makefile - makefile that allows you to build the library file

simplecppactor_nocpo_fc2k.xml - parameters for the FC2K application (NOTE! This file contains my own settings; we will modify them during the tutorial)

simplecppactor_nocpo_workflow.xml - example workflow

2.1.8.2. Build the code by issuing

shell> make clean

shell> make

The code is now ready to be used within FC2K.

2.1.8.3. Prepare environment for FC2K

Make sure that all required system settings are correctly set

shell> source $ITMSCRIPTDIR/ITMv1 kepler test 4.10b > /dev/null

2.1.8.4. Start FC2K application

This is as simple as typing fc2k in a terminal:

shell> fc2k

After a while, you should see FC2K’s main window.

2.1.8.5. Open project simplecppactor_nocpo

Choose File -> Open

Navigate to $TUTORIAL_DIR/FC2K/simplecppactor_nocpo

Open file simplecppactor_nocpo_fc2k.xml.

You should see the new project loaded into FC2K.

2.1.8.6. Project settings

Please take a look at the project settings.

Function arguments:

one input argument - double

one output argument - double

After loading the parameters, you will notice that the library location points to a location within your $TUTORIAL_DIR directory.

2.1.8.7. Actor generation

After all the settings are correct, you can generate the actor.

Simply press the “Generate” button and wait until FC2K finishes the generation.

2.1.8.8. Confirm Kepler compilation

After the actor is generated, FC2K offers to compile the Kepler application. Make sure to compile it by pressing “Yes”.

2.1.8.9. You can now start Kepler and use the generated actor

Open a new terminal window, make sure that all environment settings are correctly set, and execute Kepler:

shell> source $ITMSCRIPTDIR/ITMv1 kepler test 4.10b > /dev/null

shell> kepler

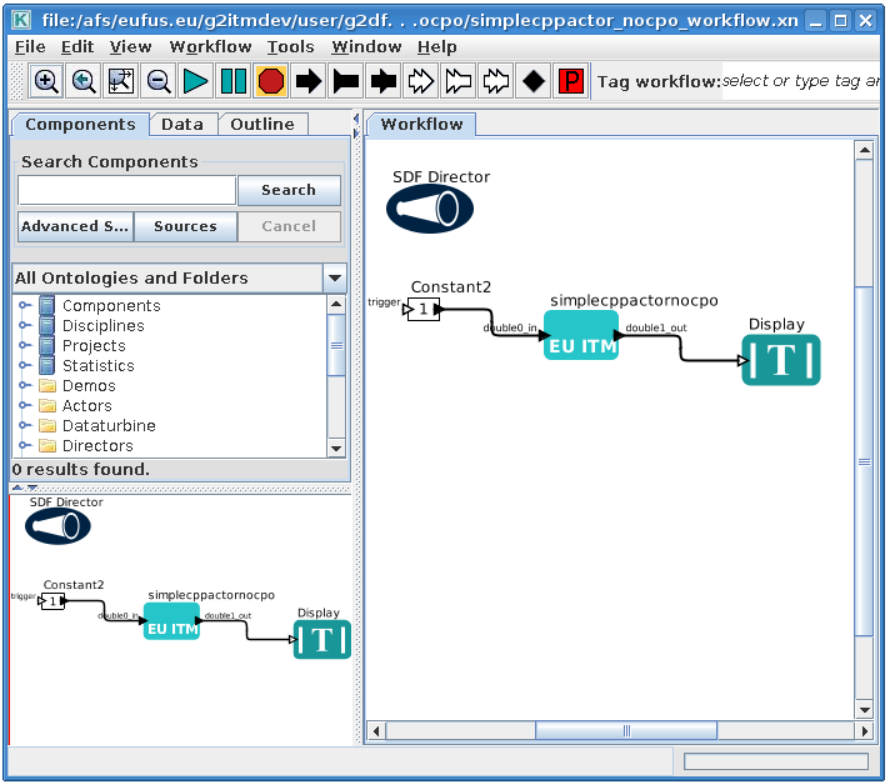

After Kepler is started, open the example workflow from the following location:

$TUTORIAL_DIR/FC2K/simplecppactor_nocpo/simplecppactor_nocpo_workflow.xml

You should see a similar workflow on screen.

2.1.8.10. Launch the workflow

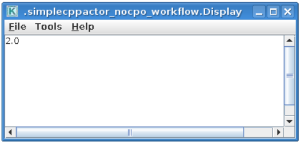

You can start the workflow by pressing the “Play” button.

After the workflow finishes its execution, you should see a result similar to the one below:

Exercise no. 3 finishes here.

2.1.9. FC2K - Example 4 - Embedding C++ code within Kepler (CPOs)

Exercise no. 4

C++ code within Kepler (CPO handling)

(approx. 30 min)

The knowledge gained: after this exercise you will:

know how to prepare C++ codes for FC2K

know how to prepare a C++ library

know how to set up a Makefile

know how to start and configure the FC2K tool

In this exercise you will execute a simple C++ code within Kepler. To do this, follow the instructions below:

2.1.9.1. Get familiar with codes that will be incorporated into Kepler

Go to the Code Camp-related materials within your home directory:

shell> cd $TUTORIAL_DIR/FC2K/simplecppactor

You can find various files there. Pay particular attention to the following ones:

simplecppactor.cpp - C++ source code that will be executed from Kepler

Makefile - makefile that allows you to build the library file

simplecppactor_fc2k.xml - parameters for the FC2K application (NOTE! This file contains my own settings; we will modify them during the tutorial)

simplecppactor_workflow.xml - example workflow

2.1.9.2. Build the code by issuing

shell> make clean

shell> make

The code is now ready to be used within FC2K.

2.1.9.3. Prepare environment for FC2K

Make sure that all required system settings are correctly set

shell> source $ITMSCRIPTDIR/ITMv1 kepler test 4.10b > /dev/null

2.1.9.4. Start FC2K application

This is as simple as typing fc2k in a terminal:

shell> fc2k

After a while, you should see FC2K’s main window.

2.1.9.5. Open project simplecppactor

Choose File -> Open

Navigate to $TUTORIAL_DIR/FC2K/simplecppactor.

Open file simplecppactor_fc2k.xml.

You should see the new parameter settings loaded into FC2K.

2.1.9.6. Project settings

Please take a look at the project settings.

Function arguments:

input argument - equilibrium

input argument - double

output argument - double

You should modify these settings so that they point to locations within your home directory. They will typically be as follows:

2.1.9.7. Actor generation

After all the settings are correct, you can generate the actor.

Simply press the “Generate” button and wait until FC2K finishes the generation.

2.1.9.8. Confirm Kepler compilation

After the actor is generated, FC2K offers to compile the Kepler application. Make sure to compile it by pressing “Yes”.

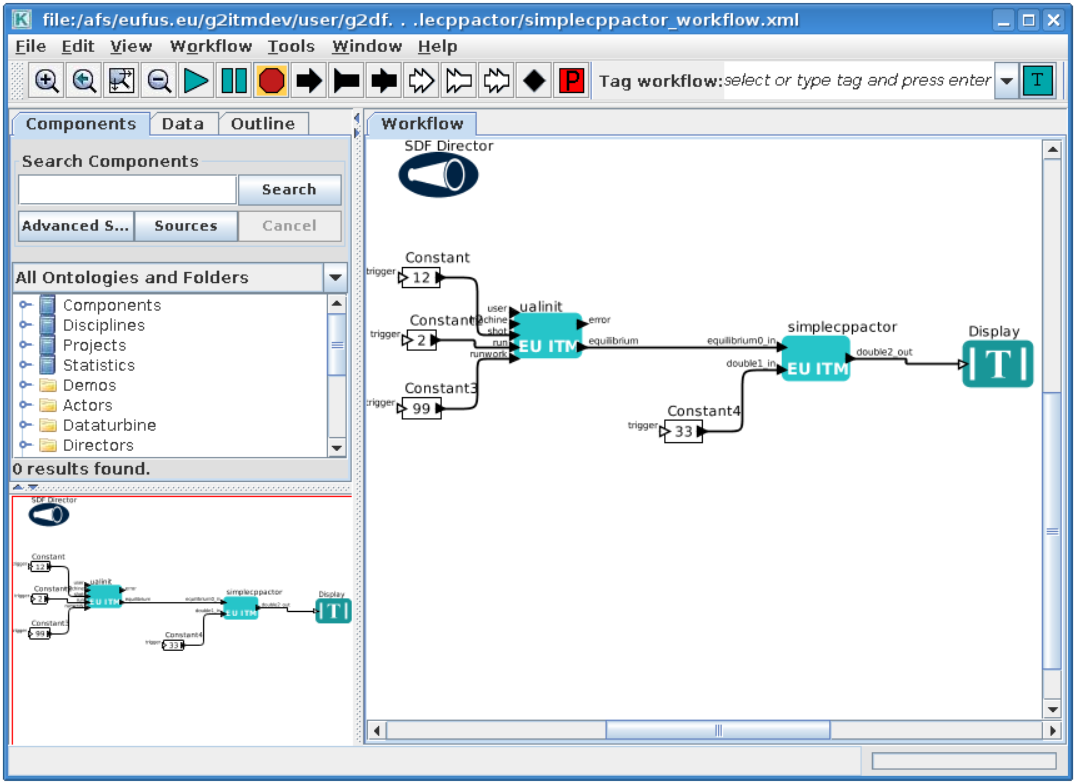

2.1.9.9. You can now start Kepler and use the generated actor

Open a new terminal window, make sure that all environment settings are correctly set, and execute Kepler:

shell> source $ITMSCRIPTDIR/ITMv1 kepler test 4.10b > /dev/null

shell> kepler.sh

After Kepler is started, open the example workflow from the following location:

$TUTORIAL_DIR/FC2K/simplecppactor/simplecppactor_workflow.xml

You should see a similar workflow on screen.

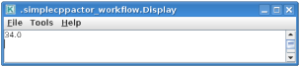

2.1.9.10. Launch the workflow

You can start the workflow by pressing the “Play” button.

After the workflow finishes its execution, you should see a result similar to the one below:

Exercise no. 4 finishes here.

2.1.10. IMAS Kepler 2.1.3 (default release)

2.1.10.1. Installation of default version of Kepler (without actors)

In order to use the most recent version of Kepler, do the following. First of all, make sure you have the imas-kepler directory inside your $HOME.

In case you already have imas-kepler inside $HOME, you can move it to $ITMWORK/imas-kepler:

> mv $HOME/imas-kepler $ITMWORK/imas-kepler

If you don't have the $HOME/imas-kepler directory, create it inside $ITMWORK:

> mkdir $ITMWORK/imas-kepler

Then create a symbolic link inside $HOME:

> cd $HOME

> ln -s $ITMWORK/imas-kepler

Then, you can load the imasenv module by calling:

> module load imasenv

If there is no Kepler version installed, you will be informed by the following message:

WARNING: Cannot find /afs/eufus.eu/user/..../imas-kepler/2.5p2-2.1.3... Run kepler_install_light before running kepler;

INFO: setting KEPLER=/gw/swimas/extra/kepler/2.5p2-2.1.3;

IMAS environment loaded.

Please do not forget to set database by calling 'imasdb <machine_name>' !

In that case, call kepler_install_light; you will see the installation process running in your terminal.

> kepler_install_light

Warning: $KEPLER_INSTALL_PATH override by environment: /afs/eufus.eu/user/g/g2michal/imas-kepler/2.5p2-2.1.3

mkdir: created directory ‘/afs/eufus.eu/g2itmdev/user/g2michal/imas-kepler/2.5p2-2.1.3’

sending incremental file list

.ptolemy-compiled

build-area/

build-area/README.txt

build-area/build.xml

build-area/current-suite.txt

...

...

...

‘gui’ -> ‘gui-2.5’

‘common’ -> ‘common-2.5’

Done installing /afs/eufus.eu/g2itmdev/user/g2michal/imas-kepler/2.5p2-2.1.3.

Run `module switch kepler/2.5p2-2.1.3` to update $KEPLER to match.

Then run `kepler` to try your lightweight installation.

You have to switch the module to make sure that the KEPLER variable points to the proper location:

> module switch kepler/2.5p2-2.1.3

Once you have set the version of Kepler, you can run it by typing kepler:

> kepler

The base dir is /afs/eufus.eu/g2itmdev/user/g2michal/imas-kepler/2.5p2-2.1.3

Kepler.run going to run.setMain(org.kepler.Kepler)

JVM Memory: min = 1G, max = 8G, stack = 20m, maxPermGen = default

adding $CLASSPATH to RunClassPath: /gw/switm/jaxfront/R1.0/XMLParamForm.jar:/gw/switm/jaxfront/R1.0/jaxfront-core.jar:/gw/switm/jaxfront/R1.0/jaxfront-swing.jar:/gw/switm/jaxfront/R1.0/xercesImpl.jar:/gw/swimas/core/imas/3.20.0/ual/3.8.3/jar/imas.jar

...

...

2.1.10.2. Installation of “dressed” version of Kepler (with actors)

To use the most recent version of Kepler (with actors), do the following. First of all, make sure you have an imas-kepler directory inside your $HOME:

> mkdir $HOME/imas-kepler

Then load the imasenv module:

> module load imasenv

If no Kepler version is installed, you will be informed by the following message:

WARNING: Cannot find /afs/eufus.eu/user/..../imas-kepler/2.5p2-2.1.3... Run kepler_install_light before running kepler;

INFO: setting KEPLER=/gw/swimas/extra/kepler/2.5p2-2.1.3;

IMAS environment loaded.

Please do not forget to set database by calling 'imasdb <machine_name>' !

Switch to the “dressed” version of Kepler and then run kepler_install_light:

> module switch kepler/2.5p2-2.1.3_IMAS_3.20.0

> kepler_install_light

Warning: $KEPLER_INSTALL_PATH override by environment: /afs/eufus.eu/user/g/g2michal/imas-kepler/2.5p2-2.1.3_IMAS_3.20.0

mkdir: created directory ‘/afs/eufus.eu/g2itmdev/user/g2michal/imas-kepler’

mkdir: created directory ‘/afs/eufus.eu/g2itmdev/user/g2michal/imas-kepler/2.5p2-2.1.3_IMAS_3.20.0’

...

...

Done installing /afs/eufus.eu/g2itmdev/user/g2michal/imas-kepler/2.5p2-2.1.3_IMAS_3.20.0.

Run `module switch kepler/2.5p2-2.1.3_IMAS_3.20.0` to update $KEPLER to match.

Then run `kepler` to try your lightweight installation.

You have to switch the module to make sure that the KEPLER variable points to the proper location:

> module switch kepler/2.5p2-2.1.3_IMAS_3.20.0

Once you have set the version of Kepler, you can run it by typing kepler:

> kepler

The base dir is /afs/eufus.eu/g2itmdev/user/g2michal/imas-kepler/2.5p2-2.1.3_IMAS_3.20.0

Kepler.run going to run.setMain(org.kepler.Kepler)

JVM Memory: min = 1G, max = 8G, stack = 20m, maxPermGen = default

...

...

2.1.11. IMAS Kepler 2.1.5 (release candidate)

Most recent steps for Gateway users

To use the most recent version of Kepler, do the following. First of all, make sure you have an imas-kepler directory (with a modulefiles subdirectory) inside your $HOME:

> mkdir -p $HOME/imas-kepler/modulefiles

Make sure to set the IMAS_KEPLER_DIR variable inside your .cshrc file:

> echo "setenv IMAS_KEPLER_DIR $HOME/imas-kepler" >> ~/.cshrc

Now you can load the imasenv/3.21.0 module. Note that this module uses kepler/2.5p2-2.1.5 instead of kepler/2.5p2-2.1.3:

> module load imasenv/3.21.0

IMAS environment loaded.

Please do not forget to set database by calling 'imasdb <machine_name>' !

Now you can create your personal Kepler installation. Please note that since release 2.5p2-2.1.5 and keplertools-1.7.0 it is possible to switch between different installations of Kepler; they will not collide.

> kepler_install my_own_kepler

Using IMAS_KEPLER_DIR at: /pfs/work/g2michal/imas-keplers.

Using KEPLER_SRC from KEPLER: /gw/swimas/extra/kepler/2.5p2-2.1.5.

mkdir: created directory ‘/pfs/work/g2michal/imas-keplers/my_own_kepler’

mkdir: created directory ‘/pfs/work/g2michal/imas-keplers/my_own_kepler/.kepler’

mkdir: created directory ‘/pfs/work/g2michal/imas-keplers/my_own_kepler/.ptolemyII’

mkdir: created directory ‘/pfs/work/g2michal/imas-keplers/my_own_kepler/KeplerData’

Done installing /pfs/work/g2michal/imas-keplers/my_own_kepler/kepler.

‘/gw/swimas/extra/keplertools/1.7.0/share/modulefiles/kepler’ -> ‘/pfs/work/g2michal/imas-keplers/modulefiles/kepler/my_own_kepler’

Kepler was installed inside /pfs/work/g2michal/imas-keplers/my_own_kepler

Its module file is: /pfs/work/g2michal/imas-keplers/modulefiles/kepler/my_own_kepler

To load this environment, run: module switch kepler/my_own_kepler

To see available installations: module avail kepler

As you can see, your personal Kepler installations are available via modules. To switch to a given version of Kepler, switch the module:

> module switch kepler/my_own_kepler

Once you have set the version of Kepler, you can run it by typing kepler:

> kepler

The base dir is /marconi_work/eufus_gw/work/g2michal/imas-keplers/my_own_kepler/kepler

Kepler.run going to run.setMain(org.kepler.Kepler)

JVM Memory: min = 1G, max = 8G, stack = 20m, maxPermGen = default

...

...

2.1.12. Installation based on README file

Installation instructions for the most recent version of IMAS Kepler

Detailed, up-to-date instructions on how to install and switch between different installations of Kepler can be found in the README of the kepler-installer repository:

> git clone ssh://git@git.iter.org/imex/kepler-installer.git

> cat kepler-installer/README

On the Gateway, you can also find the latest documentation at the following location:

> cat $SWIMASDIR/extra/kepler-installer/README

2.2. General Grid Description and Grid Service Library

2.2.1. Resources

GForge project page

Linking to library: general, specific

A tutorial talk. Note: some slides might be out of date, please refer to the documentation.

2.2.2. Documentation

4.09a Resources: Sources, Fortran Examples

Documentation:

Release v1.2: Fortran 90, Python, ualconnector

4.10a Resources: Sources, Fortran Examples

Documentation:

Release v1.2: Fortran 90, Python, ualconnector

2.2.3. Outdated documentation

This section collects information and documentation related to the general grid description.

Some presentations:

A tutorial talk from 2011

General Meeting 2011: Short overview talk and detailed presentation

Instructions on how to get a copy of the Grid Service Library

Documentation for the EU-IM Grid Service Library: Fortran 90, Python

A short manual for ualconnector and VisIt

Some examples are included in the Grid Service Library distribution.

2.2.3.1. Example grids

2.2.3.1.1. Example grid details

This section describes a number of example grids and gives some examples for specific constructs (object lists, subgrids).

2.2.3.1.1.1. Example Grid #1: 2d structured R,Z grid

Note: the grids shown here are used in the unit tests of the grid service library implementation, i.e. the automated testing framework.

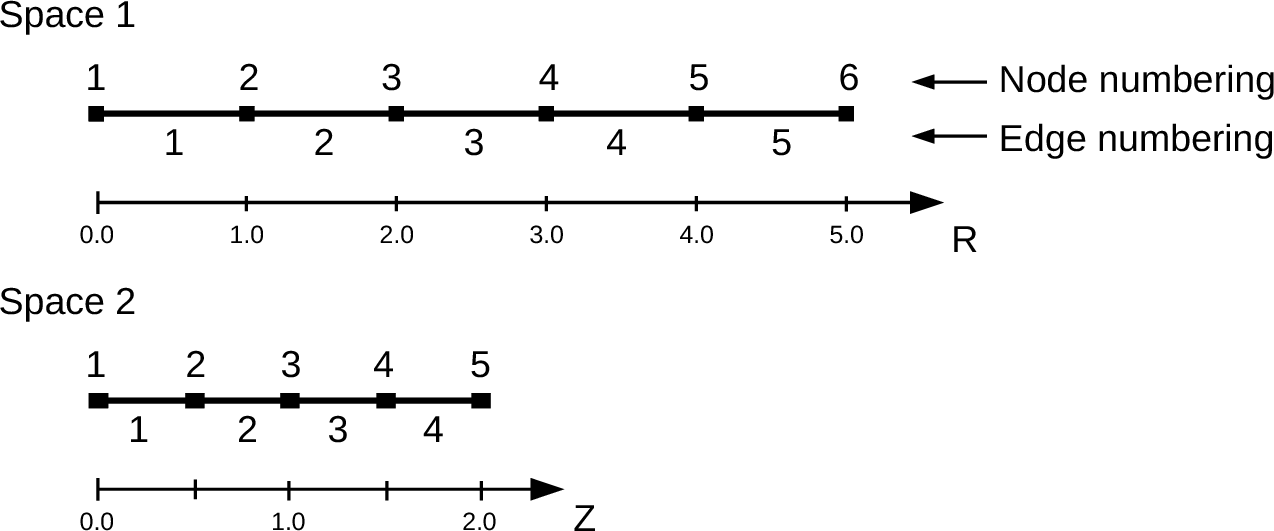

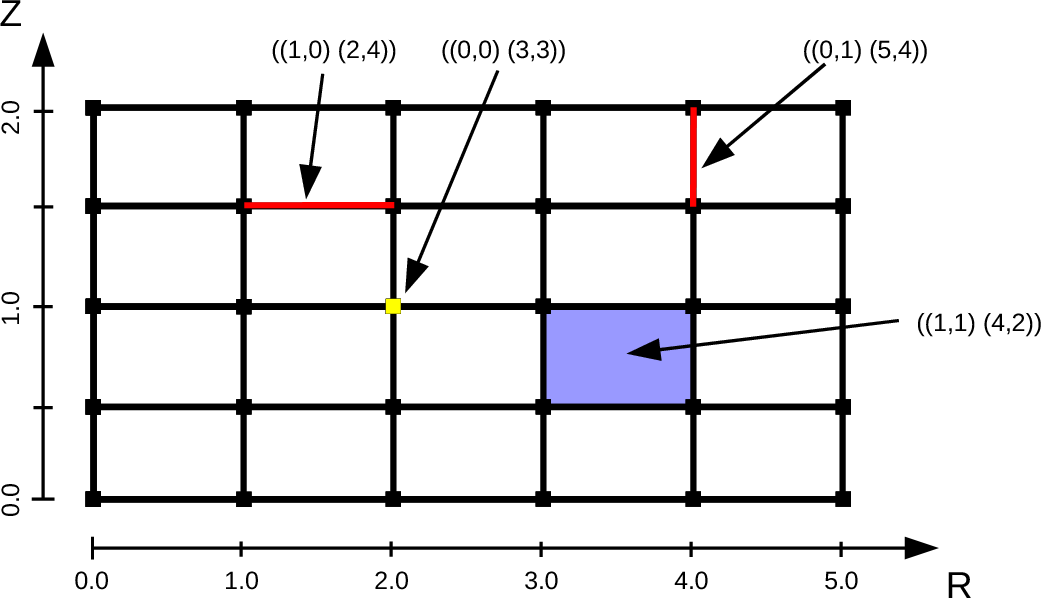

A 2d grid in (R,Z), constructed by combining two structured one-dimensional spaces. The spaces are defined as follows; both define nodes and edges as subobjects.

The whole grid then looks like this (note the slightly different scales in R and Z):

Some examples of object descriptors, with explanations:

((1,1) (4,2)) = a 2d object (2d cell or face), implicitly created by combining the 1d object (edge) no. 4 from space 1 and the 1d object (edge) no. 2 from space 2.

((1,0) (2,4)) = a 1d object (edge), implicitly created by combining the 1d object (edge) no. 2 from space 1 with the 0d object (node) no. 4 from space 2.

((0,0) (2,2)) = a 0d object (node), implicitly created by combining the 0d objects (nodes) no. 2 from space 1 and no. 2 from space 2.
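The descriptor notation can be mirrored in a few lines of Python. This is a minimal sketch, not the grid service library's API: the names `object_dimension` and `describe` are hypothetical, and a descriptor is assumed to be a Python pair `(object_class, object_index)` with one entry per space.

```python
# Hypothetical helpers, not part of the grid service library.
# A descriptor is modelled as (object_class, object_index): object_class[i]
# is the dimension (0 = node, 1 = edge) of the subobject taken from space i+1,
# and object_index[i] is its 1-based index in that space.

SUBOBJECT_NAMES = {0: "node", 1: "edge"}


def object_dimension(descriptor):
    """Dimension of the combined object = sum of the subobject dimensions."""
    object_class, _ = descriptor
    return sum(object_class)


def describe(descriptor):
    """Spell out which subobject from which space forms the object."""
    object_class, object_index = descriptor
    parts = [
        f"{SUBOBJECT_NAMES[dim]} no. {idx} from space {space}"
        for space, (dim, idx) in enumerate(zip(object_class, object_index), start=1)
    ]
    return f"{object_dimension(descriptor)}d object: " + " + ".join(parts)


print(describe(((1, 1), (4, 2))))  # 2d object: edge no. 4 from space 1 + edge no. 2 from space 2
print(describe(((1, 0), (2, 4))))  # 1d object: edge no. 2 from space 1 + node no. 4 from space 2
print(describe(((0, 0), (2, 2))))  # 0d object: node no. 2 from space 1 + node no. 2 from space 2
```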

2.2.3.1.1.2. Object classes

This section shows the different object classes present in the grid. The implicit numbering of the objects in a class is obtained by iterating over all subobjects defining the objects, lowest space first, i.e. the index in space 1 varies fastest.
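Assuming "lowest space first" means the space-1 index varies fastest (as in the implicit list expansions below), the implicit number of an object within its class works like a column-major array offset. This sketch assumes the sizes of example grid #1 as read off the examples in this section (space 1: 6 nodes, 5 edges; space 2: 5 nodes, 4 edges); `implicit_index` and `class_size` are hypothetical helpers, and the figures remain the authoritative numbering.

```python
# Assumed sizes of example grid #1: COUNTS[space][dim] is the number of
# nodes (dim 0) or edges (dim 1) in space 1 (R) and space 2 (Z).
COUNTS = [[6, 5], [5, 4]]


def implicit_index(object_class, object_index, counts=COUNTS):
    """1-based number of an object within its class, space 1 varying fastest."""
    position = 0
    stride = 1
    for space, (dim, idx) in enumerate(zip(object_class, object_index)):
        position += (idx - 1) * stride
        stride *= counts[space][dim]  # the next space advances after a full sweep
    return position + 1


def class_size(object_class, counts=COUNTS):
    """Total number of objects in a class (product over the spaces)."""
    size = 1
    for space, dim in enumerate(object_class):
        size *= counts[space][dim]
    return size


print(implicit_index((1, 1), (4, 2)), "of", class_size((1, 1)))  # 9 of 20
```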

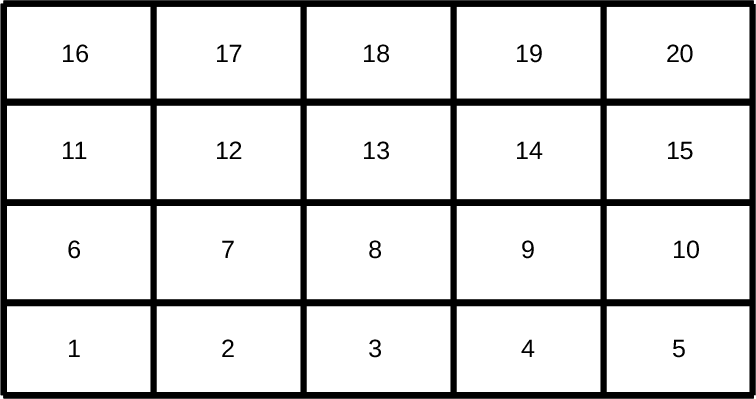

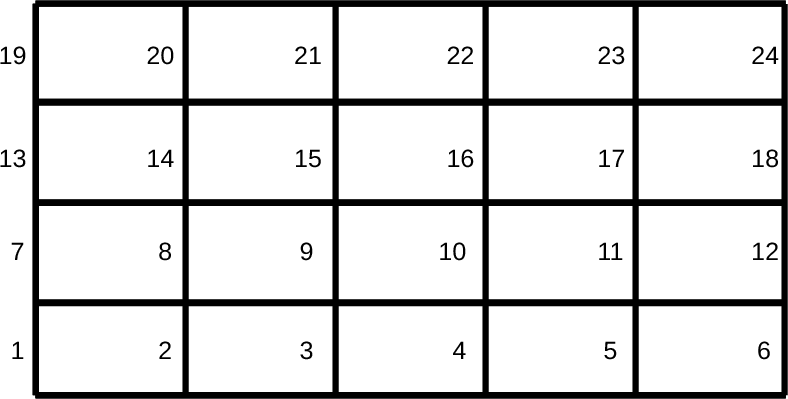

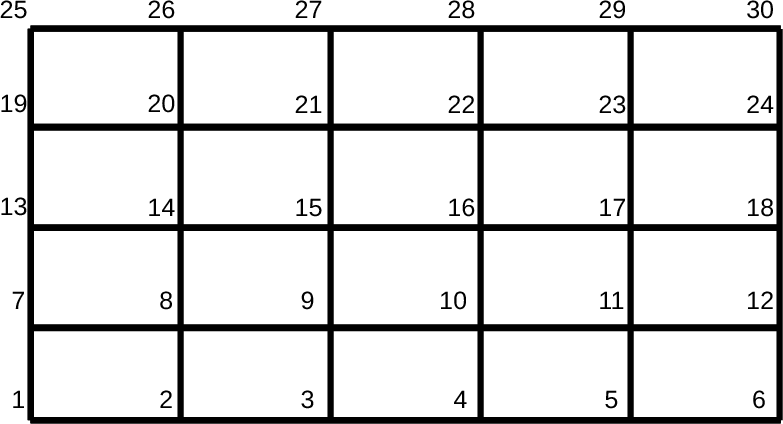

Object class (1,1): 2d cells/faces. They have the following implicit numbering:

Object class (1,0): 1d edges, aligned along the R axis (“r-aligned”). They have the following implicit numbering:

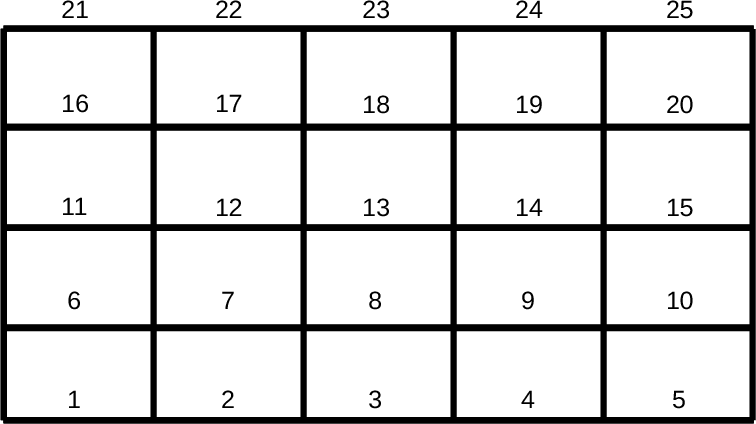

Object class (0,1): 1d edges, aligned along the Z axis (“z-aligned”). They have the following implicit numbering:

Object class (0,0): 0d nodes. They have the following implicit numbering:

2.2.3.1.1.3. Example 2: B2 grid

2.2.3.1.2. Object list examples

Some examples of object lists, to explain the concept and show the notation. All examples refer to the 2d structured R,Z example grid #1 given above.

Object descriptor

A single object (= an object descriptor), here for the object with object class (1,1) and object index (4,2):

((1,1) (4,2))

Explicit object lists

An explicit object list is simply an enumeration of object descriptors. The ordering of the objects is given directly by their position in the list. Note that by definition, all objects in the list must be of the same class. An implementation of an explicit object list should enforce this; if you need lists of objects of differing classes, have a look at subgrids.

An explicit list of 2d cells (faces), listing the four corner cells of the grid in the order bottom-left, bottom-right, top-left, top-right:

(((1,1) (1,1)),

((1,1) (5,1)),

((1,1) (1,4)),

((1,1) (5,4)))
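The class check that an explicit object list implementation should enforce can be sketched as follows. `make_explicit_list` is a hypothetical name, not a grid service library routine; descriptors are modelled as `(object_class, object_index)` pairs.

```python
def make_explicit_list(descriptors):
    """Return the descriptors as an ordered tuple, rejecting mixed classes."""
    descriptors = tuple(descriptors)
    classes = {object_class for object_class, _ in descriptors}
    if len(classes) > 1:
        # Lists with objects of differing classes belong in a subgrid instead.
        raise ValueError(f"explicit object list mixes object classes: {sorted(classes)}")
    return descriptors


# The four corner cells of example grid #1, in the order given above.
corners = make_explicit_list([
    ((1, 1), (1, 1)),  # bottom-left
    ((1, 1), (5, 1)),  # bottom-right
    ((1, 1), (1, 4)),  # top-left
    ((1, 1), (5, 4)),  # top-right
])
```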

Implicit object lists

Implicit object lists use the implicit order of (sub)objects to form an efficient representation of (possibly large) sets of objects. They thus avoid the explicit enumeration of individual objects done in explicit object lists. The following examples demonstrate the implicit list notation. Note: the implicit list notation is used in the Python implementation of the grid service library in exactly the form given here.

Selecting all indices

An implicit object list of all r-aligned edges:

((1,0) (0,0))

Object and subobject indices in the grid description start counting from 1, i.e. object no. 1 is the first object. The index 0 is special and denotes an undefined index. In this notation, it denotes all possible indices.

An implicit object list of the (z-aligned) boundary edges on the left boundary of the grid:

((0,1) (1,0))

The first entry of the index tuple denotes the first node in the r-space; the second entry denotes all edges in the z-space. The implicit list denotes a total of four 1d edges. Their implicit numbering is again given by iterating over all defining objects, lowest space first. The list therefore expands to

((0,1) (1,1))

((0,1) (1,2))

((0,1) (1,3))

((0,1) (1,4))
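The expansion above can be sketched in Python by treating an index of 0 as "all indices" and iterating with the space-1 index varying fastest. `expand_wildcards` is a hypothetical helper, and the subobject counts are assumed from example grid #1.

```python
from itertools import product

# Assumed sizes of example grid #1: COUNTS[space][dim] is the number of
# nodes (dim 0) or edges (dim 1) in space 1 (R) and space 2 (Z).
COUNTS = [[6, 5], [5, 4]]


def expand_wildcards(object_class, object_index, counts=COUNTS):
    """Expand an implicit list in which an index of 0 means 'all indices'."""
    choices = []
    for space, (dim, idx) in enumerate(zip(object_class, object_index)):
        if idx == 0:
            choices.append(range(1, counts[space][dim] + 1))
        else:
            choices.append([idx])
    # product() varies its last argument fastest, so pass the spaces in
    # reverse order and flip each combination back: space 1 varies fastest.
    return [(tuple(object_class), tuple(reversed(combo)))
            for combo in product(*reversed(choices))]


for descriptor in expand_wildcards((0, 1), (1, 0)):
    print(descriptor)
```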

Selecting explicit lists of indices

An implicit object list of the (z-aligned) right and left boundary edges:

((0,1) ([1,6],0))

The first entry of the index tuple denotes a list of nodes in the r-space, more specifically the first and the last (=6th) node. The second entry again denotes all edges in the z-space. The implicit list then denotes a total of eight 1d edges in the following order:

((0,1) (1,1))

((0,1) (6,1))

((0,1) (1,2))

((0,1) (6,2))

((0,1) (1,3))

((0,1) (6,3))

((0,1) (1,4))

((0,1) (6,4))

Selecting ranges of indices

An implicit object list of all 2d cells except the cells on the left and right boundaries:

((1,1) ((2,4),0))

The first entry of the index tuple denotes a range of edges in the r-space, more specifically the edges 2 to 4. The second entry of the index tuple denotes all four edges in the z-space. The implicit list then denotes a total of 12 2d cells in the following order:

((1,1) (2,1))

((1,1) (3,1))

((1,1) (4,1))

((1,1) (2,2))

((1,1) (3,2))

((1,1) (4,2))

((1,1) (2,3))

((1,1) (3,3))

((1,1) (4,3))

((1,1) (2,4))

((1,1) (3,4))

((1,1) (4,4))

All implementations of the grid service library define the constant GRID_UNDEFINED=0 to specify an undefined index. Using GRID_UNDEFINED instead of 0 is advised to increase the readability of the code. The following notations are therefore equivalent:

((1,0) (0,0)) = ((1,0) (GRID_UNDEFINED,GRID_UNDEFINED))

((0,1) (1,0)) = ((0,1) (1,GRID_UNDEFINED))
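A sketch of an expander covering all three notations above: GRID_UNDEFINED (0) for all indices, a Python list for an explicit list of indices, and a Python tuple for an inclusive range. It is an illustration under assumed grid sizes for example grid #1, not the grid service library's own implementation; `index_choices` and `expand` are hypothetical names.

```python
from itertools import product

GRID_UNDEFINED = 0          # in implicit list notation: "all indices"
COUNTS = [[6, 5], [5, 4]]   # assumed sizes of example grid #1, COUNTS[space][dim]


def index_choices(entry, n):
    """Indices selected by one entry of the index tuple (n = available count)."""
    if isinstance(entry, list):      # explicit list of indices, e.g. [1, 6]
        return list(entry)
    if isinstance(entry, tuple):     # inclusive range, e.g. (2, 4)
        first, last = entry
        return list(range(first, last + 1))
    if entry == GRID_UNDEFINED:      # all indices 1..n
        return list(range(1, n + 1))
    return [entry]                   # a single index


def expand(implicit_list, counts=COUNTS):
    """Expand ((class), (indices)) into descriptors, space 1 varying fastest."""
    object_class, object_index = implicit_list
    choices = [index_choices(entry, counts[space][dim])
               for space, (dim, entry) in enumerate(zip(object_class, object_index))]
    # product() varies its last argument fastest, hence the double reversal.
    return [(tuple(object_class), tuple(reversed(combo)))
            for combo in product(*reversed(choices))]


print(expand(((0, 1), ([1, 6], GRID_UNDEFINED))))  # left/right boundary edges
print(len(expand(((1, 1), ((2, 4), GRID_UNDEFINED)))))  # inner cells
```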

2.2.3.1.3. Subgrid examples

A subgrid is an ordered list of grid objects of a common dimension. The difference from object lists is that a subgrid can contain objects of different object classes.

The subgrid concept is central to storing data on grids. To store data, a subgrid first has to be defined. The objects in the subgrid have a fixed order, which allows the data associated with the objects to be stored unambiguously in vectors.

Technically, a subgrid is an ordered list of object lists, of which every individual list is either explicit or implicit. The ordering of the objects in the subgrid is then directly given by the ordering of the object lists and the ordering of the grid objects therein.

Subgrid example

The following subgrid consists of all boundary edges of the 2d R,Z example grid #1, given as four implicit object lists:

((1,0) (0,1)) ! bottom edges

((0,1) (6,0)) ! right edges

((1,0) (0,5)) ! top edges

((0,1) (1,0)) ! left edges

Explicitly listing the objects in the order given by the subgrid gives:

1: ((1,0) (1,1)) ! bottom edges

2: ((1,0) (2,1))

3: ((1,0) (3,1))

4: ((1,0) (4,1))

5: ((1,0) (5,1))

6: ((0,1) (6,1)) ! right edges

7: ((0,1) (6,2))

8: ((0,1) (6,3))

9: ((0,1) (6,4))

10: ((1,0) (1,5)) ! top edges

11: ((1,0) (2,5))

12: ((1,0) (3,5))

13: ((1,0) (4,5))

14: ((1,0) (5,5))

15: ((0,1) (1,1)) ! left edges

16: ((0,1) (1,2))

17: ((0,1) (1,3))

18: ((0,1) (1,4))

The number at the beginning of each line is the local index of the object, where local means locally in the subgrid. Note that, again, counting starts at 1.
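The subgrid above can be reproduced by expanding its four implicit lists in order and numbering the result from 1. This hypothetical sketch handles only the 0 (all indices) notation used in this example, assumes the grid sizes of example grid #1, and shows how a data vector aligned with the local indices could be addressed; the stored value is a placeholder.

```python
from itertools import product

COUNTS = [[6, 5], [5, 4]]  # assumed sizes of example grid #1, COUNTS[space][dim]


def expand(object_class, object_index, counts=COUNTS):
    """Expand an implicit list where 0 means 'all indices' (space 1 fastest)."""
    choices = [range(1, counts[s][d] + 1) if i == 0 else [i]
               for s, (d, i) in enumerate(zip(object_class, object_index))]
    return [(object_class, tuple(reversed(c))) for c in product(*reversed(choices))]


# The boundary subgrid: an ordered list of implicit object lists.
boundary_subgrid = [
    ((1, 0), (0, 1)),  # bottom edges
    ((0, 1), (6, 0)),  # right edges
    ((1, 0), (0, 5)),  # top edges
    ((0, 1), (1, 0)),  # left edges
]


def subgrid_objects(subgrid):
    """Concatenate the expanded lists; list position k-1 holds local index k."""
    objects = []
    for object_class, object_index in subgrid:
        objects.extend(expand(object_class, object_index))
    return objects


objects = subgrid_objects(boundary_subgrid)
local_index = {obj: k for k, obj in enumerate(objects, start=1)}

# Data on the subgrid: one vector entry per object, ordered by local index.
data = [0.0] * len(objects)                     # placeholder values
data[local_index[((0, 1), (6, 1))] - 1] = 1.0   # Python lists are 0-based
```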

2.2.3.2. Grid service library

2.2.3.2.1. Using the grid service library

2.2.3.2.1.1. Setting up the environment

The grid service library requires the EU-IM data structure version 4.09a (or later). Before using it you have to make sure your environment is set up properly. The following section assumes you are using csh or tcsh on the Gateway.

First, your environment variables have to be set up properly. To check them, run

echo $TOKAMAKNAME

It should return

test

Also do

echo $DATAVERSION

It should return

4.09a

(or some higher version number). If either of them returns something different, run

source $ITMSCRIPTDIR/ITMv1 kepler test 4.09a > /dev/null

and check the variables again.

Second, you have to ensure your data tree is set up properly. Run

ls ~/public/itmdb/itm_trees/$TOKAMAKNAME/$DATAVERSION/mdsplus/0/

If you get something like “No such file or directory”, you have to set up the tree first by running

$ITMSCRIPTDIR/create_user_itm_dir $TOKAMAKNAME $DATAVERSION

and then do the previous check again.

2.2.3.2.1.2. Checking out and testing the grid service library

To be able to get the code of the grid service library, you have to be a member of the EU-IM General Grid description (itmggd) project (you can apply for this here).

Once you are a member, you can check out the code with

svn co https://gforge-next.eufus.eu/svn/itmggd itm-grid

Then you can run the unit tests for the grid service library with

cd itm-grid

source setup.csh

This will set up environment variables (especially OBJECTCODE) and aliases. Then run

testgrid setup

This will set up the build system for the individual languages. It will also build and execute a Fortran program that writes a simple 2d example grid stored in an edge CPO into shot 1, run 1.

To actually run the tests do

testgrid all

This will go through the implementations in the different languages (F90, Python, …) and run unit tests for every one of them. If all goes well, it should end with the message

Test all implementations: OK

If this is not the case, something is broken and must be fixed.

2.2.3.2.2. Example applications (outdated)

Note: this is a bit outdated. Have a look here.

2.2.3.2.2.1. Plotting 3d wall geometry with VisIt (temporary solution, not required any more)

This example plots a 3d wall representation stored in the edge CPO (in the future, this information will be stored in the wall CPO). The example data used here is generated by a preprocessing tool which is part of the ASCOT code.

Check out the grid service library (see above; you don’t necessarily have to run the tests).

Change to the python/ directory and setup the environment:

cd itm-grid/python/; source setup.csh

Edit the file itm/examples/write_xdmf.py to use the right shot number.

Run it (still in the python/ directory of the service library) with

python26 itm/examples/write_xdmf.py

This will create two files: wall.xmf and wall.h5

Start VisIt with

visit23

and open the wall.xmf file. Then select Plot->Mesh->Triangle and click the "Draw" button.

2.2.3.2.2.2. Using UALConnector to visualize CPOs using the general grid description

UALConnector allows you to bring data directly from the UAL into VisIt.

Check out the grid service library (see above; you don’t necessarily have to run the tests).

Run UALConnector. Examples:

./itm-grid/ualconnector -s 9001,1,1.0 -c edge -u klingshi -t test -v 4.09a

./itm-grid/ualconnector -s 15,1,1.0 -c edge -u klingshi -t test -v 4.09a

When finished, close VisIt and terminate the UALConnector by typing ‘quit’.

You don’t even have to check out the service library. UALConnector is made available at

~klingshi/bin/itm-grid/ualconnector

so the examples above can also be run as:

~klingshi/bin/itm-grid/ualconnector -s 9001,1,1.0 -c edge -u klingshi -t test -v 4.09a

~klingshi/bin/itm-grid/ualconnector -s 15,1,1.0 -c edge -u klingshi -t test -v 4.09a

2.2.3.3. IMP3 General Grid Description and Grid Service Library - Tutorial

2.2.3.3.1. Setup your environment

echo $DATAVERSION

echo $TOKAMAKNAME

should give “4.09a” and “test”. If not, run

source $ITMSCRIPTDIR/ITMv1 kepler test 4.09a > /dev/null

To copy the tutorial files:

cp -r ~klingshi/bin/itm-grid ~/public

Switch to the right version of the PGI compiler:

module unload openmpi/1.3.2/pgi-8.0 compilers/pgi/8.0

module load compilers/pgi/10.2 openmpi/1.4.3/pgi-10.2

To set up the environment:

cd $HOME/public/itm-grid/f90

source setup.csh

2.2.3.3.2. Compile & run examples

2d structured grid write example

The source file is at:

src/examples/itm_grid_example1_2dstructured_servicelibrary.f90

Compile:

make depend

make $OBJECTCODE/itm_grid_example1_2dstructured_servicelibrary.exe

Run:

$OBJECTCODE/itm_grid_example1_2dstructured_servicelibrary.exe

2d structured grid read example

The source file is at:

src/examples/itm_grid_example1_2dstructured_read.f90

Compile:

make $OBJECTCODE/itm_grid_example1_2dstructured_read.exe

Run:

$OBJECTCODE/itm_grid_example1_2dstructured_read.exe

2.2.3.3.3. Visualize

To visualize the data written by the example program:

~klingshi/bin/itm-grid/ualconnector -s 9001,1,0.0 -c edge

To visualize a more complex dataset:

~klingshi/bin/itm-grid/ualconnector -s 17151,899,1000.0 -c edge -u klingshi -t aug

Combining data from two CPOs:

~klingshi/bin/itm-grid/ualconnector -s 17151,898,1000.0 -c edge -s 17151,899,1000.0 -c edge -u klingshi -t aug